🪄 My first-hand account of training TikTok to steal my essence

Any sufficiently advanced algorithm is indistinguishable from magic

Algorithms guide many aspects of our lives, from our everyday choices (what movie to watch, what verb tense to use while writing) to the decisions that others make about us (who is fit to be paroled or who will be filtered out of a job search). But lately, one digital platform algorithm has received a lot of attention for what it’s capable of, both for good and for ill.

Journalists are digging into what makes TikTok recommendations so powerful

Josh experiments to see if TikTok can “figure him out” from 2 hours of watching

Wilfred reflects on a notable analog recommendation engine: the school librarian

Open TikTok for the very first time, and within minutes the “For You” page is recommending short videos. The only navigational tool is what you might have in dating apps: pull down to skip to the next video, or tap and hold to choose “Not interested” and see fewer videos like the one you’re watching.

Once uploaded, every video is automatically labeled with attributes. Once the app starts observing which videos you watch and how long you watch them for, your watching habits can be matched to the attributes in the videos that you watch. Very quickly, the algorithm can gain a spookily accurate perception of your interests, and maybe more than that. Both Reply All and the Wall Street Journal recently investigated TikTok’s recommendation engine. Reply All focused on how the For You page can seemingly unearth thoughts from deep within one’s psyche. New Yorker columnist Kyle Chayka has said, “I believe the For You page is the haven of our deepest secrets, the true algorithmically determined root of our identities, and thus should be kept private: a For You Only page.”
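To make the matching step above concrete, here is a minimal sketch of how watch time could be turned into an interest profile. It is an illustration under stated assumptions, not TikTok's actual method: the attribute labels, the completion-ratio scoring, and the function names `build_interest_profile` and `rank_candidates` are all hypothetical.

```python
from collections import defaultdict


def build_interest_profile(watch_events):
    """Turn (attributes, watched_seconds, video_seconds) events into attribute weights.

    Assumption: the share of a video that was watched is a proxy for interest.
    """
    scores = defaultdict(float)
    for attributes, watched, duration in watch_events:
        completion = min(watched / duration, 1.0)  # cap rewatches at 100%
        for attr in attributes:
            scores[attr] += completion
    total = sum(scores.values())
    # Normalize so the weights sum to 1 (empty profile if nothing was watched).
    return {attr: s / total for attr, s in scores.items()} if total else {}


def rank_candidates(profile, candidates):
    """Order (video_id, attributes) pairs by the summed weight of their attributes."""
    return sorted(
        candidates,
        key=lambda c: sum(profile.get(a, 0.0) for a in c[1]),
        reverse=True,
    )


# Hypothetical viewing session: two cat videos and one art video watched
# to the end, one prank video skipped after two seconds.
events = [
    ({"cats", "funny"}, 15, 15),
    ({"prank"}, 2, 20),
    ({"watercolor", "art"}, 30, 30),
    ({"cats"}, 10, 10),
]
profile = build_interest_profile(events)

candidates = [("v1", {"prank"}), ("v2", {"art", "cats"}), ("v3", {"cooking"})]
ranked = rank_candidates(profile, candidates)
print([video_id for video_id, _ in ranked])
```

Even in this toy version, no likes or follows are needed: attention alone is enough to push the skipped prank video down the list.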

On the podcast episode, a Reply All producer interviewed her sister, who has a unique biological condition: she can’t burp normally. She had never met another person with the same issue. And yet somehow, the TikTok algorithm served her up a video by another woman who also could not burp. For the sister, the video made her feel truly seen, and for the first time, able to connect to others with the same concerns.

The WSJ took a different approach. They trained the algorithm with over a hundred bots that went down rabbit-holes and in some cases ended up in disturbing places. For example, a bot programmed to be interested in ‘sadness’ and ‘depression’ was served up more and more of that content, with no limits or reservations, until almost everything on the For You page was sad and depressing. And this process did not take as long as you might think: “TikTok fully learned many of our accounts’ interests in less than two hours. Some it figured out in less than 40 minutes.”

When reached for comment, a TikTok representative responded that real users have more diverse interests than programmed bots. I’ve never used TikTok, but I’m a real person with diverse interests. Could the app figure me out in two hours? And so, despite Chayka’s warning, I started down a rabbit-hole of my own, chronicled below. Over the course of a few days, I spent the length of a feature film on the app. I did not list any interests, like any posts, or follow any accounts. As far as I know, all I gave the app to work with was my genuine attention, IP address, and a lot of swiping.

Curiouser and curiouser!

First half hour:

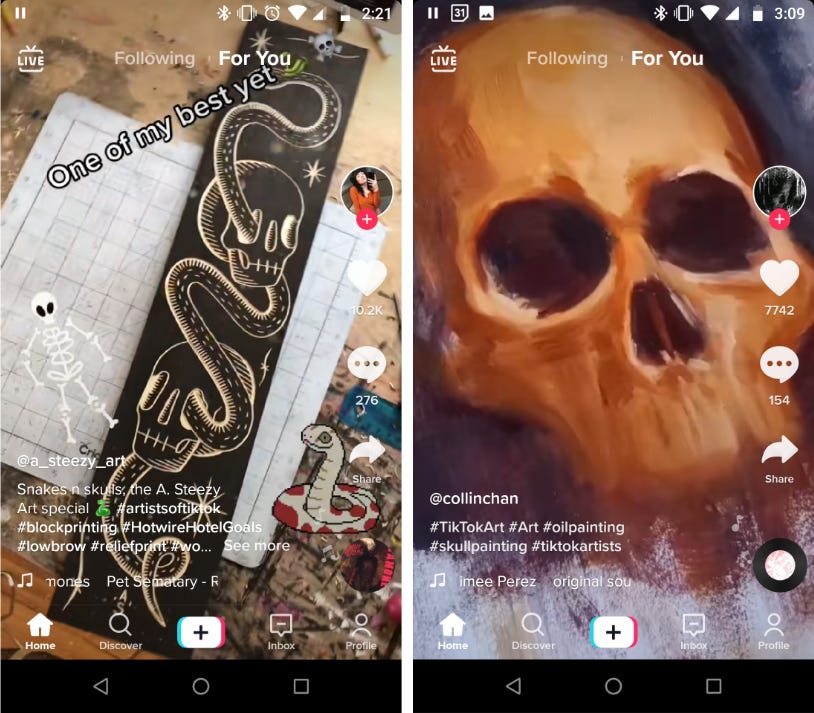

I know from the WSJ’s reporting to expect that at first, the app would serve up a lot of very popular (millions of likes) videos that have been pre-screened by human moderators. My first impression is that there are a lot of ads! At first blush, it doesn’t seem like there’s a lot for me here. I see one video clearly from Maryland (I’m in D.C.), but that’s the only sign that they’re using my IP address. The first interesting thing I see is a Jack Black video. I’ve loved his band, Tenacious D, since high school. But more than likely, this is a coincidence — he’s a movie star with a huge presence on TikTok. After a half hour, TikTok seems to know I like cats, art and food — I have two cats and I’ve worked in art and food — but so do millions of other people in the world.

After an hour:

I decide to test the Not Interested button because I’m seeing a lot of prank videos that joke about violence, which I don’t like. I use the button and right away that kind of thing vanishes, seemingly for good. Now I’m occasionally starting to see videos with fewer than 100,000 likes, and the videos are getting a bit weirder, in a good way. Towards an hour in, I’m finally getting away from constant cat videos. I’m surprised I haven’t seen any drawing yet because I’m an artist, but maybe that’s asking too much. I’m wondering if this experiment will work.

After an hour and a half:

A video begins and a man says, “so I started working with Mexicans today and…” I decide in the moment to use the Not Interested button again, and it’s robust enough to keep me from seeing more stereotypical race-based humor, which is what I was going for. There are more art videos coming now, with a lot of painting. A lot of the early cooking videos I was getting were microwave hacks or instructionals for making dips with whole blocks of cream cheese. Now I’m getting recipes for things like pickled broccoli stems, which is closer to the experimental home cooking I like to do. I also get my first video about urbanism, a topic I follow closely. But there are still some off-signals: I’m getting a lot of ADHD and depression/anxiety content, which honestly is not right for me. I really enjoy a video about “chaos gardening.” Surprisingly, the cats are completely gone, and I miss them a little. It’s still not exactly “me,” but it’s way closer than before!

After two hours:

I’m getting a lot more art than before, including watercolor, which I paint, and even a video referencing Studio Ghibli — I love those movies. It’s satisfying to get shown kinds of skilled art and craftsmanship I’ve never seen before. I see my first political video, about #StopLine3, an issue I know very little about but am interested in. I’m also getting tiny house and DIY content that I might not have picked, but if I’m being honest, I really enjoy it. There are videos showing the kinds of things I’ve seen recommended many times on Kottke, my favorite blog. I’m also now regularly seeing videos made by far less popular users, some with only a few hundred likes. There are still videos I’m not crazy about, like skits where teens do an impression of their mom, but overall I’m enjoying what I’m seeing a lot more. I’m somewhat surprised that some of my longest-held professional interests, like cartooning and cheesemaking, haven’t surfaced, but I have no doubt that they would eventually.

At the end of this experiment, I am enjoying how much genuine creativity there does seem to be on TikTok, as well as videos that I’m really happy to see or that make me laugh. But now there’s one dominant feeling: I want to watch more. I think that’s the important point. Not only can TikTok be addictive, it may serve you more and more of whatever it thinks you want, even if that’s terrible for you, like misinformation or self-harm content. The further you get into your For You page, the further away you are likely getting from human-moderated content. I think people have the right to watch whatever they want, and TikTok has the right to try to make money off it, but what moral responsibility does the platform have if it plays a role in a person’s mental deterioration or radicalization? There is some question about whether one’s online viewing habits can cause thinking to change, but for me anyway, uncertainty is not an excuse. I want to see platforms experimenting with guardrails or other mitigating design elements for when they notice people deep diving in potentially dangerous waters. This is an issue I’m looking forward to continuing to think and write about here.

So how well does TikTok know me after two hours (687 videos) of just watching? I’m definitely impressed. It’s a little bit like taking a personality test or getting your fortune read: It’s amazing to be told who you are and recognize some truth in it, even if you’re the source of that information in the first place. I’m not yet altogether spooked, but I suspect that if I keep on using the app, the moment I’ve heard about, when I’ll wonder if there’s magic or spycraft at work, is inevitable. What about you? If you use TikTok, I’d love to hear how you think the algorithm compares to other platforms. Is this the beginning of a new era of eerily personal platforms? Or is this just a tweak on the same formula we’ve seen for years now? Comment below and let me know.

– Josh Kramer

"And what is the use of a book,” thought Alice, “without pictures or conversations?”

The first “recommendation engine” I encountered was my public school librarian. Twice a week my classmates and I would get an hour to wander the library’s aisles and pick books to take home. The librarian knew each of us by name and what we had been reading. I was in third grade and had just finished the first Harry Potter book; the sequels hadn’t been published yet. I told her I wanted more. She suggested Brian Jacques’ Redwall, a series about peaceful mice and rabbits overcoming warlike weasels and snakes. A year later, after I had devoured all of Jacques’ novels, my librarian proposed The Lord of the Rings: she had loved it as a kid, and it had similar themes, heroes, and villains. And the year after that, when I was through with those, she said I was ready for the most advanced novels in the school library: Philip Pullman’s His Dark Materials, not only a good vs. evil story but one that challenges the very concepts of good and evil.

The idea of a recommendation engine is simple: it collects data about the content we like and the things we do. Then it predicts what we might like by looking for content similar to the content we like (content-based filtering) and by comparing us to other users who act similarly to us (collaborative filtering). On a basic level, this is what my librarian was trained to do. It’s quite easy to imagine that an AI would have been able to come up with the same recommendations she did. But unlike an algorithm, my librarian was invested in me. She wasn’t just feeding me content, but participating in my growth. She shared a part of herself with me. She was my model for what it meant to be a reader. At New_ Public, we’re wondering: who plays the role of the librarian on the internet? Do we need to create it? How do digital recommendation engines complicate (or complement) that role?
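The two approaches named above can be sketched in a few lines. This is a toy illustration, not how any real platform or librarian works: the theme tags in `BOOKS`, the ratings in `RATINGS`, the extra readers, and the choice of cosine similarity are all assumptions made for the example.

```python
import math


def cosine(a, b):
    """Cosine similarity between two sparse vectors stored as dicts."""
    dot = sum(a[k] * b[k] for k in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


# Hypothetical theme tags for each book (content features).
BOOKS = {
    "Harry Potter": {"fantasy": 1, "good_vs_evil": 1, "school": 1},
    "Redwall": {"fantasy": 1, "good_vs_evil": 1, "animals": 1},
    "The Lord of the Rings": {"fantasy": 1, "good_vs_evil": 1, "quest": 1},
    "Cookbook": {"cooking": 1},
}

# Hypothetical 1-5 ratings from three readers (behavior data).
RATINGS = {
    "me": {"Harry Potter": 5},
    "reader_a": {"Harry Potter": 5, "Redwall": 4},
    "reader_b": {"Cookbook": 5, "The Lord of the Rings": 2},
}


def content_based(liked, books):
    """Rank the other books by how similar their tags are to a liked book."""
    others = [title for title in books if title != liked]
    return sorted(others, key=lambda t: cosine(books[liked], books[t]), reverse=True)


def collaborative(user, ratings):
    """Rank unread books by other readers' ratings, weighted by reader similarity."""
    scores = {}
    for other, their_ratings in ratings.items():
        if other == user:
            continue
        similarity = cosine(ratings[user], their_ratings)
        for title, rating in their_ratings.items():
            if title not in ratings[user]:
                scores[title] = scores.get(title, 0.0) + similarity * rating
    return sorted(scores, key=scores.get, reverse=True)


print(content_based("Harry Potter", BOOKS))
print(collaborative("me", RATINGS))
```

Content-based filtering only needs to know about the books; collaborative filtering only needs to know about the readers. Neither one knows anything about why a book mattered to the person holding it.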

– Wilfred Chan

Interacting with TikTok was prompting me to try to see myself as the app does, and by extension to reimagine myself in terms of the pleasures it presumed I was deriving from its content. Would I eventually actually want what it had to offer? Would I see that as my own desire? Or would I still just be desiring myself through the lens of those recommendations? Is there even any difference between those things? When I wrote about TikTok before, I had already primed myself to come to this sort of conclusion. What remains unimaginable to me, still, is that I would actually want to watch these videos for their own sake, without the algorithmic intrigue. So I remain convinced that the point of TikTok is to teach us to love algorithms over and above any content, and to prepare us to accept an increasing amount of AI intervention into our lives. It seems designed to program users with a form of subjectivity appropriate to algorithmic control: where coercion is merged with an experience of "convenience" as one's desires are inferred externally rather than needing to be articulated through our own conscious effort.

– Rob Horning, Internal Exile

Flash Forward

Don’t forget about the Flash Fiction Contest! Find more info here (scroll down a bit). Email us your stories at hello@newpublic.org with ‘Flash Fiction’ in the subject line. Good luck!

Deadline: 9/1/21

Theme: Social Media

Word Limit: 500 words

Prize: Original illustration, publication in the newsletter

Next Week

We’ll dig into the survey results, and take a look at what has changed in a year. Plus, an introduction to an ongoing topic of interest.

Nobody's gonna know (they're gonna know),

Josh and Wilfred

Design by Josh

New_ Public is a partnership between the Center for Media Engagement at the University of Texas, Austin, and the National Conference on Citizenship, and was incubated by New America.