🛑 Stopping viral toxicity before it starts

Can we use machine learning to circuit-break misinformation?

This week at a glance:

🛢 Circuit breakers suggest a way algorithms can keep toxicity off platforms

👩🏽‍💻 The tech may not be there yet, and human moderation is still vital

🤖 Even if they work as intended, circuit breakers are not a panacea

This week we’re exploring circuit breakers:[1] an alluring use of machine learning that is supposed to detoxify the big social platforms, assist human moderators, and stop lies and propaganda in their tracks before they spread, if (and it’s a big “if”) the technology works as intended.

In August 2020, the Center for American Progress (CAP) proposed a system for social platforms modeled on Wall Street’s trading curbs, often called circuit breakers. A sudden drop in a stock or market index can trigger a trading halt as short as 15 minutes or as long as the rest of the day, depending on how steep the drop is.
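For readers curious about the mechanics, here is a rough sketch of the tiered rule in Python. It assumes the current U.S. market-wide thresholds, which aren’t spelled out above: a 7% (Level 1) or 13% (Level 2) drop in the S&P 500 from the prior close pauses trading for 15 minutes if it happens before 3:25 p.m. Eastern, and a 20% (Level 3) drop halts trading for the rest of the day. It’s a simplification, not the exchanges’ actual code; for one thing, it ignores the rule that Levels 1 and 2 can each trigger only once per day.

```python
from datetime import time

# Assumed U.S. market-wide thresholds: percentage decline in the S&P 500
# from the previous day's close.
LEVEL_1 = 0.07
LEVEL_2 = 0.13
LEVEL_3 = 0.20
# Level 1 and 2 declines only halt trading before 3:25 p.m. Eastern.
LATE_CUTOFF = time(15, 25)


def circuit_breaker(prior_close: float, current: float, now: time) -> str:
    """Describe the halt (if any) that a market-wide decline would trigger."""
    drop = (prior_close - current) / prior_close
    if drop >= LEVEL_3:
        # A Level 3 decline stops trading for the rest of the day, at any time.
        return "Level 3: halt for the rest of the day"
    if now < LATE_CUTOFF:
        if drop >= LEVEL_2:
            return "Level 2: halt for 15 minutes"
        if drop >= LEVEL_1:
            return "Level 1: halt for 15 minutes"
    return "no halt"


print(circuit_breaker(4000, 3700, time(10, 30)))  # Level 1: halt for 15 minutes
print(circuit_breaker(4000, 3400, time(15, 30)))  # no halt (too late in the day)
print(circuit_breaker(4000, 3100, time(15, 50)))  # Level 3: halt for the rest of the day
```

The steeper the drop, the longer the pause: that graduated “speed bump” is the part CAP wanted to borrow.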

When trading halted in March 2020, New York Stock Exchange President Stacey Cunningham described the circuit breakers as “a precautionary measure that we put in place so that the market can slow down for a minute.” One study found that in 2020, “without the circuit breaker rule, the market would continue to slump by up to -19.98% on the days following the previous crashes. In reality, the rule has successfully prevented the predicted plummet and further stimulated the market to recover for up to 9.31% percent.”

According to CAP, the key lesson of Wall Street’s circuit breakers is that they introduce friction, a kind of speed bump. CAP imagined that platforms could use something similar in spirit to prevent the spread of Covid misinformation:

Platforms should detect, label, suspend algorithmic amplification, and prioritize rapid review and fact-checking of trending coronavirus content that displays reliable misinformation markers, which can be drawn from the existing body of coronavirus mis/disinformation.
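Here is one way CAP’s four steps (detect, label, suspend amplification, prioritize review) might be wired together. It is a hypothetical sketch, not any platform’s actual system: the share-rate threshold, the phrase list standing in for “misinformation markers,” and every name in it are invented for illustration, and a real system would presumably rely on a trained classifier rather than simple phrase matching.

```python
import heapq
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

# Hypothetical values chosen for illustration only.
SHARES_PER_HOUR_THRESHOLD = 1_000
MISINFO_MARKERS = {"miracle cure", "plandemic"}


@dataclass
class Post:
    post_id: str
    text: str
    shares_last_hour: int
    amplified: bool = True        # eligible for algorithmic recommendation
    label: Optional[str] = None   # warning label shown to users, if any


@dataclass
class CircuitBreaker:
    # Min-heap keyed on negative share rate, so the fastest-spreading
    # flagged post is popped (reviewed) first.
    review_queue: List[Tuple[int, str]] = field(default_factory=list)

    def check(self, post: Post) -> bool:
        """Trip the breaker only if a post is trending AND matches a marker."""
        trending = post.shares_last_hour >= SHARES_PER_HOUR_THRESHOLD
        text = post.text.lower()
        flagged = any(marker in text for marker in MISINFO_MARKERS)
        if not (trending and flagged):
            return False
        post.amplified = False  # suspend amplification; the post stays up
        post.label = "Under review by fact-checkers"
        heapq.heappush(self.review_queue, (-post.shares_last_hour, post.post_id))
        return True

    def next_for_review(self) -> Optional[str]:
        """Return the ID of the most urgent flagged post, or None if empty."""
        if not self.review_queue:
            return None
        return heapq.heappop(self.review_queue)[1]


breaker = CircuitBreaker()
for post in [
    Post("a", "This miracle cure stops Covid overnight", shares_last_hour=5_000),
    Post("b", "Vaccine clinic opens Saturday", shares_last_hour=8_000),
    Post("c", "Try this miracle cure", shares_last_hour=1_200),
]:
    breaker.check(post)

print(breaker.next_for_review())  # a
print(breaker.next_for_review())  # c
```

Note the design choice: tripping the breaker suspends amplification and adds a label but leaves the post up, which is the speed bump CAP describes rather than a takedown. The fastest-spreading posts reach human reviewers first.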

And according to Politico, Big Tech was already deep into an experiment in using far more machine learning to identify harmful and illegal content.

In October 2020, the New York Post published a story about a laptop supposedly belonging to Hunter Biden. But before the link could go viral on Facebook, it was caught by the company’s internal “viral content review system,” which is designed to keep viral mis- and disinformation from spreading. The tech giant touted the episode as a test case showing that machine learning could react faster than human moderators.

But last August, a man livestreamed his drive to D.C., where he then threatened to set off a bomb outside the U.S. Capitol. He was live on Facebook for five hours until moderators disabled the broadcast. Why didn’t the automation kick in this time?

The appeal of less harmful and illegal content on social platforms is probably self-evident, especially to readers of this newsletter. But there’s also a near-constant crisis on most platforms around the significant toll that reviewing sensitive content takes on workers in trust and safety, sometimes called integrity. Facebook has agreed to pay $52 million in a settlement with moderators who say they developed PTSD on the job, and TikTok was recently sued by a moderator alleging “severe psychological trauma including depression.” It makes sense that companies would want to shift more of this burden to their algorithms.

Since the pandemic began and tens of thousands of content moderators were sent home, Facebook, Google, and Twitter have all announced increased automation in content moderation, Politico reported, but they have offered few specifics about its inner workings.[2]

However, TikTok has offered more detail. Last July, in announcing that TikTok would begin to introduce automation into its moderation process, Eric Han, the company’s Head of US Safety, wrote: “Automation will be reserved for content categories where our technology has the highest degree of accuracy, starting with violations of our policies on minor safety, adult nudity and sexual activities, violent and graphic content, and illegal activities and regulated goods.”

It makes sense that TikTok would start by automating relatively clear-cut violations, because context can be extremely tricky for machines to interpret. Facebook cites bullying as an example of something particularly nuanced and contextual. This may also explain why the livestreamed bomb threat (as well as many other examples) failed to trigger a rapid response from Facebook.

More fundamentally, using previous violations to predict future ones is not a perfect system. According to Politico, after Facebook, Google, and Twitter increased automated moderation in 2020, there were fewer post removals, more successful content appeals, and more hate speech. Similarly, TikTok saw takedown disputes go through the roof after its change last summer.[3]

But perhaps the best indication that this technology might not be ready for primetime comes from the hundreds of Facebook moderators who wrote this open letter in late 2020: “Management told moderators that we should no longer see certain varieties of toxic content coming up in the review tool from which we work— such as graphic violence or child abuse, for example.” However, “the AI wasn’t up to the job,” they write. “Important speech got swept into the maw of the Facebook filter—and risky content, like self-harm, stayed up.” (Facebook responded, but not about the automation, and the company has been pretty quiet about its “viral content review system” since touting the Hunter Biden story.)

What if we could make these automated moderation systems more accountable to the public? Returning to the Wall Street analogy, there’s another important detail: circuit breakers were not just “put in place” by stock exchanges, but were actually mandated by the Securities and Exchange Commission after the Dow Jones fell 22.6% in the 1987 Black Monday crash. Assuming this technology is refined and improved, could platforms be similarly regulated? Might OSHA set circuit breaker-based labor standards for trust and safety workers?[4] And could Congress compel platforms to use automated moderation or lose their Section 230 protection?

Over email, I reached out to Daphne Keller, Director of the Program on Platform Regulation at Stanford’s Cyber Policy Center. Keller is an expert on platform regulation and users’ rights. As she notes in a paper on amplification, there’s an important distinction between speech that is harmful yet constitutionally protected and speech that is illegal, such as terrorist content.

Using a circuit breaker to automate the moderation of harmful speech “would be a very complicated thing for the law to mandate, though, since it essentially suppresses distribution of potentially lawful speech at a time when no one knows yet whether it is illegal,” Keller told me. She added that the European Union is “currently contemplating mandating the opposite, in order to avoid the harm from ‘heckler's vetoes’ targeting lawful speech.”

As we often say, there are no panaceas.[5] It appears we are a long way from platforms employing machine learning sophisticated enough to keep our human mods healthy and our feeds free of mis- and disinformation. But there’s reason to think we might get closer: Big Tech has been developing these technologies for years, and has already applied them successfully to recognizing content as complex as copyrighted songs and child pornography. AI is evolving rapidly, and who knows what it will be capable of in a few years.

In the meantime, perhaps we should return to the idea of deliberately introducing friction. Some of our favorite digital public spaces employ a lot of it, from Front Porch Forum’s once-a-day delivery to some of the best “slow internet” projects. But heavy friction isn’t right for every situation; sometimes it’s important to learn things right away.

The appropriate amount of friction, and the human care required to facilitate it, is worth deeply considering when designing a digital space. I’d love to know what you think: what are great examples of friction in your online life?

More moderation

If you’re interested in this topic, consider reading the Everything in Moderation newsletter, which was part of our recent #Lookoutfor2022 project. It’s a pithy, weekly dive into moderation, written by Friend of New_ Public Ben Whitelaw. Ben is based in London and has covered these issues for years as a freelance journalist, so he has a great sense of the long term and a more global perspective than we often consider here in the US.

Seeking a Head of Product

In addition to looking to hire a Community Architect and Design Facilitator, we’re now interested in hiring a full-time Head of Product to help us develop the New_ Public Incubator. (!) More details on that to come soon. If you, or anyone you know, specializes in product management and design, as well as growth marketing and people management, please see the job description and apply here.

Getting carried away with the footnotes these days,

Josh

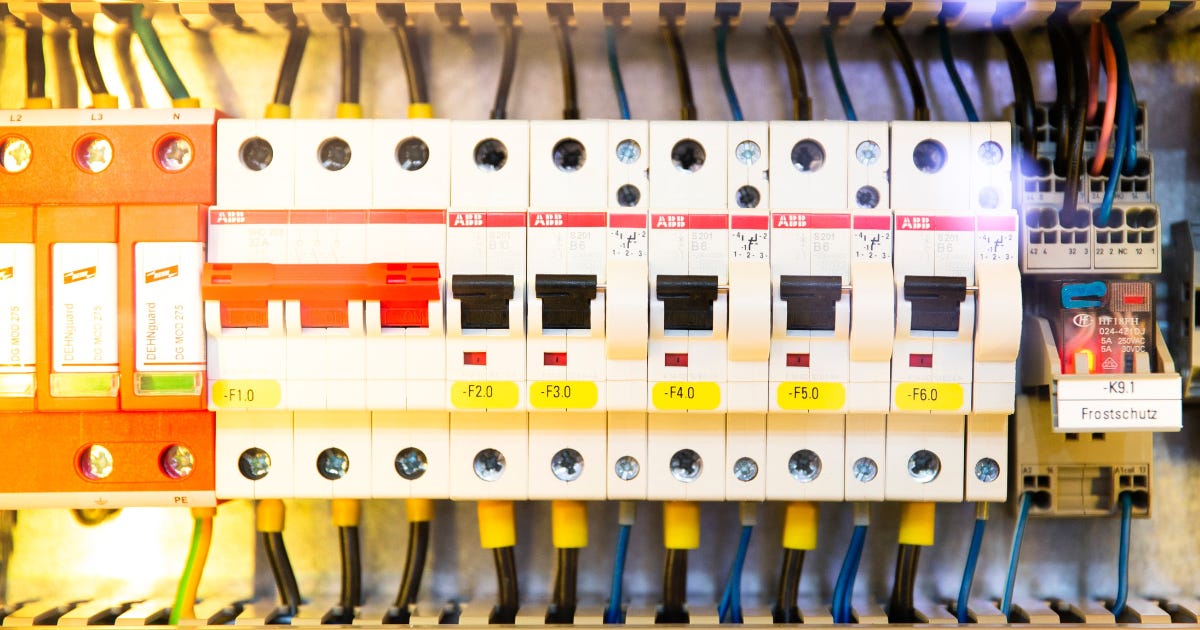

Photos by Markus Spiske on Unsplash

New_ Public is a partnership between the Center for Media Engagement at the University of Texas, Austin, and the National Conference on Citizenship, and was incubated by New America.

[1] Daphne Keller told me in an email: “I'm not sure there is consensus on what counts as a ‘circuit breaker.’ I've mostly heard it used to describe purely quantitative caps in virality... That would limit the spread of dangerous lies, but would also limit the spread of the next Lil Nas X, or activist campaign, etc.” This is obviously a much bigger kind of limit, one that would pose an existential threat to nearly all existing social platforms, for better or for worse. In this newsletter, I’m instead using “circuit breaker” in the sense it has appeared in the press.

[2] A serious caveat: platforms are not required to share data. Much of what we know about algorithms and moderation has been volunteered by the companies, discovered by investigative journalists, or leaked by ex-employees. Lawmakers have recently proposed legislation requiring platforms to make more data available, but so far our understanding is necessarily incomplete.

[3] It’s worth noting that correlation is not causation: we don’t know for sure that increased automation caused these changes, or even what a reasonable baseline would be, because, say it with me: we don’t have the data.

[4] If you have expertise in labor law, I’d love to hear what you think; just reply to this email.

[5] This was a rallying cry of Dr. Elinor Ostrom, whose work on “the commons” we look to for instruction and inspiration.