🇺🇸🗳️ Beyond Election Day: how social media shapes politics

Insights on our information ecosystem for the 2024 election


“DEADLOCK: An Election Story” is a new PBS special where election experts and officials tabletop role-play a hypothetical election scenario. It’s a cool project, intended to facilitate honest conversation and empathy across the political spectrum. Social media plays a part in the game:

On “DEADLOCK,” a deceptive post has gone viral and the social platform must step in to respond. But handling one problematic post, or all of them throughout an election season, is only one piece of a much larger, more complex situation with US political discourse.

Sports fans know that there are two sides to winning — the strategy and talent needed to win each game, but also the long-term planning and focus to build a team season by season. Mostly, when we think about social media and elections, we’re only thinking about the first part: how did this one platform handle this one election? But it’s worth asking, what do we miss by only paying close attention to what’s broken during an election?

Zooming out, it’s clear that there are significant problems with political speech on social media, and platforms can’t just wing it and hold on for dear life in the months before an election. Things were bad in the 2020 US election, and they’re worse now. But going forward, there is a path towards repair and regeneration.

Game time: election season

The 2016 election, which turned out to be quite a mess for social media, sent aftershocks throughout Silicon Valley. Four years later, the platforms, especially Facebook, were motivated to have the 2020 election go as smoothly as possible. And right afterwards, it seemed like social media had gotten “through election night without a disaster,” as The New York Times reported:

There have been no major foreign interference campaigns unearthed this week, and Election Day itself was relatively quiet. Fake accounts and potentially dangerous groups have been taken down quickly, and Facebook and Twitter have been unusually proactive about slapping labels and warnings in front of premature claims of victory.

Thanks to Frances Haugen and subsequent reporting, we now know how much effort Facebook in particular put into securing the 2020 election. Facebook has lots of tools to remove and prevent the spread of false information, lies, and propaganda, and they used them aggressively. According to The Washington Post, this included “reducing the number of invitations a group administrator could send from 100 to 30, and the triggering of a special set of algorithms that would automatically reduce the spread of content the software interpreted as possible hate speech.”

But right after the election, Facebook went back to being the Facebook that “breaks things.” As Ashira Morris wrote here previously, “executives chose growth metrics over creating a positive community space online.” They quickly turned off many measures that had been put in place during the election. They dismantled the effective Civic Integrity group. A lot of trust and safety workers, now seriously burned out, moved on to other projects or jobs. According to Fast Company, on orders from Zuckerberg “the election delegitimization monitoring was to immediately stop.” Victorious, the playoff beards were shaved and players decamped for the off season.

However, the period after the 2020 election was very unusual (although not unpredictable). Groups like Stop the Steal, which had been proactively removed from Facebook during the election, started growing like crazy, with new posts about civil war or revolution appearing dozens of times a day. ProPublica later reported, “Facebook proved to be the perfect hype machine for the coup-inclined.” The worst version of the “DEADLOCK” scenario soon played out in real life, culminating in a genuine insurrection at the US Capitol on January 6.

So how has political news on social media evolved since? Unfortunately, instead of making systemic changes that would help with elections, platforms have mostly been moving in the opposite direction.

Trust the process: social media and politics since 2020

The last few years have marked a considerable shift for social media and politics, and the most obvious and attention-grabbing piece is Elon Musk’s takeover of Twitter, along with his total embrace of reactionary Republican politics.

One of Musk’s first moves was cutting workers. In total, 43% of trust and safety jobs at X/Twitter have been cut. And as is painfully obvious to anyone still on the platform, there’s far more hate speech and propaganda there now. It’s worth noting that the Community Notes feature is an important experiment in labeling false information, but nowhere near sufficient to combat the overall toxification of Twitter.

Other social media companies have now followed suit, with Meta, TikTok, Snap, and Discord all cutting trust and safety positions. In some cases, the service has been outsourced to teams with no power that are easily blamed if something goes wrong.

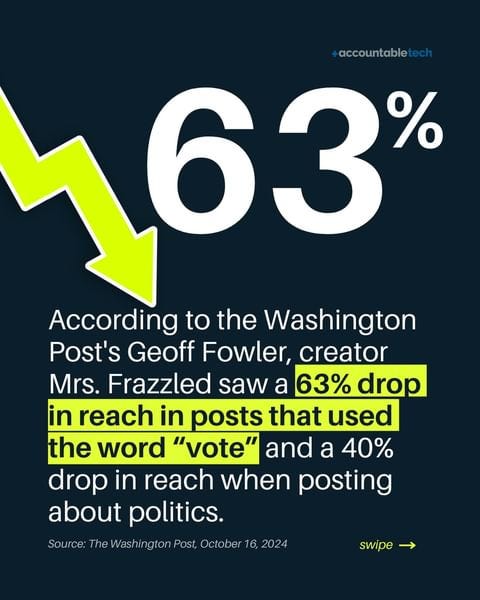

Unfortunately, as opposed to 2020, when Facebook ramped up their efforts to shut down falsehoods and hate, Meta has taken a new approach: less politics altogether. Almost immediately after January 6, Facebook began putting plans in motion to scale back political content. Now, the Facebook News tab is gone, and they are explicitly not interested in promoting political content on Threads, their Twitter clone. Even using the word “vote” is a signal for the Meta algorithms to suppress your content.

However, in researching this topic, I was struck a bit by how Meta has undeniably matured over time and is now far more practiced and better equipped to handle complex US election dynamics — if and when they choose to. For example, their cross-check system, once an inequitable white list for important users, has been overhauled over years and is now seemingly far more sophisticated and useful.

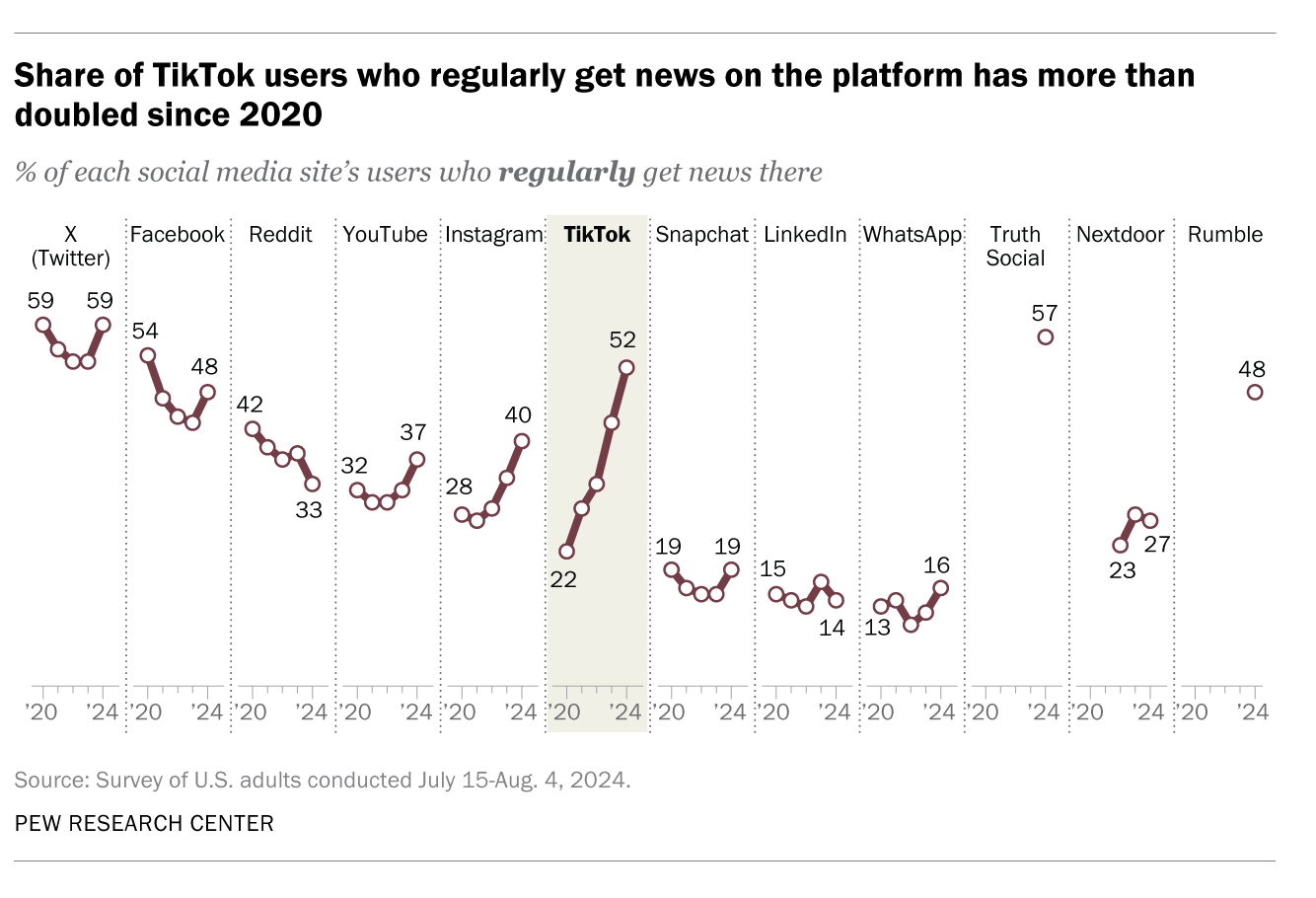

The other huge change in social media has been the ascendance of TikTok, which I’ve written about here previously. TikTok is obviously a giant, used by a majority of Americans under 30, according to Pew. There are now 42 million Gen Z eligible voters, and 39% of that generation gets their news from TikTok. There might not be political ads on the app, but TikTok creators undeniably have a role in shaping political narratives on issues as varied as the war in Gaza and Project 2025.

It’s worth remembering that TikTok’s influence has also changed some of the underlying affordances of social media: TikTok and Threads are far more oriented around what content you watch or interact with. The accounts you actually “follow” intentionally have seemingly never been less important to your experience online. And of course, TikTok itself remains politically charged. Since Congress passed a bill that might ban it, the next president will have a lot of latitude in how they choose to deal with TikTok.

The state of the information environment

Now, with the 2024 election tomorrow, the information ecosystem is even more dysfunctional than it was four years ago. Charlie Warzel, writing about the abundant misinformation related to the recent hurricanes in North Carolina, Georgia, and Florida, is nearly despondent in the face of such “depravity and nihilism”:

Scrolling through these platforms, watching them fill with false information, harebrained theories, and doctored images—all while panicked residents boarded up their houses, struggled to evacuate, and prayed that their worldly possessions wouldn’t be obliterated overnight—offered a portrait of American discourse almost too bleak to reckon with head-on.

Nearly everything you need to know about the state of social media in 2024 can be summed up in this post:

Here, a check-marked political staffer shared an AI-generated image and said she didn’t care that it’s fake. Because it’s on X, her false claim was allowed to thrive and spread. And due to misleading posts like this, local officials faced death threats and harassment, distracting them from relief efforts. Volunteers, screaming into the void, amended the post with a strident Community Note.

It’s worth noting that decades into social media, the research into misinformation’s effects on beliefs and ideology is still muddled and inconclusive. This is, in part, because access to data has been limited by the platforms. Some researchers say there just isn’t empirical evidence to support claims that misinformation is causing “massive societal harms.” Practitioners, including researchers within some of these companies, say social media misinfo clearly has measurable negative impacts.

And yet, I think it’s clear, as Warzel illustrates above, that misinformation and disinformation — or as I usually prefer to call them, viral lies, propaganda, and falsehoods — are a significant factor in the overall information ecosystem. It would be foolish to say lies about the 2020 election on Facebook had nothing to do with January 6 — they obviously did. Nothing happens in a vacuum. What you read online is part of your messy experience of an election, mixed together with what you see on the TV and hear from your neighbors and coworkers, interpreted through the lens of what you already believe and care about.

A better framework for thinking about this may actually be rumors and collective sensemaking. And right now, with trust and safety work in decline, political content being suppressed, and fake AI-generated content on the rise, our social media franchises are on a bit of a losing streak, which affects everything else.

But there’s good news — there’s a lot we can change.

How it all could be different

There are a lot of great suggestions out there for how social media platforms could better facilitate information during elections. But many of the ideas are Monday morning quarterbacking, specific tweaks meant for game day. It certainly wouldn’t hurt to display more voting information, confirmed election results, or fact-checked updates. But it’s gonna take bigger ideas, and bolder action, to solve these problems.

This will be familiar if you’re a long-time reader: we need many new options for socializing online, and vastly different ways of thinking.

I like how New_ Public Co-Director Eli Pariser put it on the Techdirt Podcast recently. It’s like we live in a world with no libraries, only bookstores. And in that case, “you don’t blame a bookstore for not being a library,” says Eli. Instead, you figure out how to open a dang library! And once you have a library, you don’t evaluate it in bookstore terms — no one expects 10x or 100x investment returns from a library.

In this case, we don’t even need a drastic reinvention, as we’ve learned from our recent research into Front Porch Forum. They’ve been making progress in a better direction for two decades. Their commitment to slower, purposeful moderation and a less extractive but more sustainable business model are truly inspiring.

And for our part, we believe in the potential of this wider movement towards stronger, more connected communities. We’ve got our Local Lab, piloting new approaches to supporting stewards with resources like our guide, and experimental features like summary newsletters. And we’ve got Public Spaces Incubator, our digital collaboration with six public media institutions worldwide, including new additions ARD in Germany and ABC in Australia.

Remember, we all have agency in how we show up online. The mass exodus from Twitter has fueled the expansion of several new platforms, and there’s no telling what’s next. After this election — no matter the outcome — we all have a say in shaping the information ecosystem to something far more functional and democratic.

Looking over local propositions on my ballot,

— Josh Kramer, Head of Editorial, New_ Public

Dear Josh,

Thank you for your insightful article on social media’s evolving role in political discourse, and especially for emphasizing community-centered solutions. We share your vision for a more democratic, trustworthy information ecosystem, and the idea of fostering healthier digital communities resonates with our own work. However, to truly realize this vision and address the scale of the challenge, we believe that solutions must operate in the shared digital space that lies above the webpage itself, rather than creating yet another siloed platform. To tackle these issues at a societal level, we need to create systemic change that enables conscious, informed choices at scale—something that community-focused initiatives alone might struggle to achieve within the constraints of traditional platform design.

A key issue, one we call “context tampering,” illustrates why broader, infrastructure-level solutions are necessary. Context tampering involves taking statements or events out of context to convey a misleading impression, often shaping public opinion with half-truths that reinforce biases or social divides. Two recent examples highlight how potent this issue has become and how dangerous it is for the information ecosystem and democratic discourse.

First, at Trump's sold-out Madison Square Garden rally, Tony Hinchcliffe’s joke about Puerto Rico’s garbage problem was criticized as racist when it actually could have brought attention to a serious environmental issue affecting the island. The Oct. 30, 2024 article in the Environmental Blog, https://www.theenvironmentalblog.org/2024/10/puerto-rico-trash-problem, begins:

"Puerto Rico is grappling with a pressing trash problem that’s not just a visual blight, but a serious threat to its stunning landscapes, marine life, and the environment. The urgency of this crisis is underscored by its far-reaching impacts on health, tourism, and the economy."

The clip circulated without context, omitting any nuance or understanding of the larger problem. A misplaced outcry ensued, stifling the possibility of honest conversation about Puerto Rico’s situation, in favor of polarizing narratives. A classic case of "context tampering."

A particularly egregious case of context tampering is on display in the viral Kamala Harris endorsement video by basketball star LeBron James, which mixed "out of context" statements by Trump with civil rights footage, creating a misleading narrative that went well beyond simple opinion. Interestingly, James' video opens with Hinchcliffe's joke: https://www.youtube.com/watch?v=WDwTZzdN7HU.

By editing Trump’s "out-of-context" audio clips into a misleading narrative and placing them over footage designed to evoke strong emotions, the video essentially created a false portrayal, tampering with context to shape public perception rather than encourage informed debate. This video epitomizes the risks of unverified, highly edited content circulating widely without tools to verify its authenticity.

We agree wholeheartedly with your point that these issues call for fresh approaches, but we believe that only a broader, infrastructure-based approach can consistently counteract context tampering and other forms of misinformation at scale. Moving beyond individual platforms into a shared space—what we call the space above the webpage—offers a unique opportunity to make informed, context-rich information available directly to users as they navigate digital environments. In this model, context could be verified, and statements traced back to their origins, giving people the tools to assess content on its true merit.

Adding another silo, however community-oriented, may only perpetuate the fragmented, sometimes misleading flow of information that currently exists. Our approach is focused on empowering people to engage with the full context of information wherever they encounter it, using a transparent and interconnected layer over existing platforms that fosters authentic discourse and allows for real-time validation. With systemic context integrity measures, we can foster conscious, informed choices at the level of digital infrastructure itself.

Your article was inspiring, and we hope our perspective contributes to this essential dialogue on shaping a more responsible, democratic digital world. We’d love to explore ways we can work together to build solutions that operate above the limitations of traditional platforms and bring integrity back to the information ecosystem.

Thank you again for pushing this vital conversation forward. We look forward to continuing it.