#21: Beneficial Tech — The Times They Are a Changin'

Welcome to Civic Signals, where ethics meets tech.

This week: A conversation with Omidyar Network’s Director of Beneficial Tech, Sarah Drinkwater, about how to “have the talk” with your product and engineering teams. Her experiences in the tech world range from building products to running Campus London, Google's first physical startup hub.

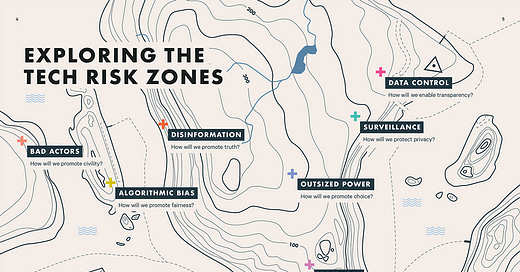

Find out about the bottom-up theory of change that informed the creation of the Ethical Explorer Pack. And it may be hard to imagine now, but tech leaders caring deeply about responsible tech might just be the new status quo in the not-so-distant future.

So grab your pack, go for a walk and start plotting how to initiate change within your own teams. But first: our conversation with Sarah, edited for length and clarity:

The framing of “explorers” is an interesting one. Tell me a bit more about that and what it means about what you felt was needed in the tech space to push some of these ideas forward.

So the Explorer archetype really came from user feedback. It came from us talking to people who were leading this work — whether leading it through doing better design or by asking questions and being that pain in the ass on the team whose hand is always in the air. And they kind of said to us, you know, we feel like isolated pioneers. There's no playbook for this work.

We really wanted to kind of build them up. I think the conventional tech narrative is changing, but it's not changing fast enough.

So when you look at the kit, things that we added in, after a lot of user feedback, were things like blunt language to get buy-in. Language to push back on all those people you work with who don't think this matters, particularly because a lot of this work is led by people who are underrepresented in tech. It was really important to us to give them the armor they need to do what they're doing already.

Language is more important than we think. EthicalOS was our predecessor product that came out in 2018. We're proud of that, but the name didn't feel right. It was an 80-slide deck. It felt very clunky. So we were like, okay, how do you make this really digestible, really accessible?

And lastly, it was kind of important to us that we had it as both a sort of digital toolkit, but also a physical one, because so much of our lives is mediated through screens, particularly right now. I've got a couple of walking dates this week that I'm really excited by. So if people can take that time offline, I think a lot of things come up in the same way that great ideas happen: in the shower, or on a walk. I don't have my best ideas just staring at my screen. It doesn't work like that.

I also really like how the cards are formatted, where you first have companies check in on where they currently stand in relation to each risk zone, then move them into “anticipating risk” type thinking — but also, how they could lead the way, and turn something that is most often framed as a critique which a) is totally fair but b) also causes companies to recoil and take a defensive stance, into a posture of leadership where caring about this stuff can also become a kind of competitive advantage.

Well, one of the reasons why I was so keen to work on this problem personally, is that I've built things in real life. I know it's really hard. And I think “techlash” has been a really powerful, positive tailwind to our work as a whole. That said, if you look at the last five years, a lot of it has been fueled by academics and thinkers, the people who are outside of commercial tech. It makes it really easy for tech to push back and be like, you don't understand us, whereas our theory and the reason why we've elected to focus on inside-change is that the next wave of great products and great tech leaders will be people who care passionately about this lens.

I’m really proud that we got the power card in there; we really wanted to have some kind of a conversation in this kit around business models because ultimately everything else you do rests upon the business model. If your investors want you to do X to make more money, you’ve got to do X and, you know, monopolies are not always a bad thing, but they very often aren’t a good thing. And certainly in the U.S. we think bigger is better, right? It's a real problem around scale.

When we think about power, it's really about choice and about control in a positive way. We know a lot more now than we did 10 years ago. When I was last raising money, I didn't know how long I would be in that relationship. I didn't know that we'd be forced to sell the company before we felt ready because we hadn't made enough money. So if I was starting out now, even someone like me with no background and connections in tech, starting a company for the first time, there’s a lot more information to access that would tell me, don't take money from these people. So I think that's something around the notion of choice that's kind of cool and empowering, particularly for any founder starting now, because — the alternative capital landscape, the Zebras Unite movement — there are all these alternative ways of being that I think really resonate with people outside of Silicon Valley.

This is an inflection point. Are we going to see the next, best tech coming from Silicon Valley? No way, not possible. I think it’s just complete groupthink.

I think it goes back to some of the questions in the guide, where you're trying to create the sense of being situated in the world.

I had made the assumption that globalization would continue to increase, but I think Covid-19 is proving that I'm wrong and that actually things are going to be a lot more national than I perhaps would have thought. But we're in this weird situation where all of us want to feel connected to where we are and to who we are. And around us, at the same time, the products that are serving us, that tell us that they're giving us that connection, are these super weird, borderless, floating-in-the-clouds mystery boxes of companies. Amazon has offices, I guess, but these companies aren't “of places” really anymore.

Meanwhile, there are some real down-to-earth consequences and implications of things like “in the cloud”, but they're abstracted.

Clouds seem fluffy, part of the weather, benign, part of the landscape. When you think about Amazon warehouse workers and some of the awful stories that we've heard about how they're treated, it's incredibly hard to connect the words that we use to talk about these kinds of companies with the realities. It becomes really overwhelming in a way. I think why we focus so much in the work that I do around funding signs of hope, like signs of people caring, is that sometimes I worry, both as a citizen and a user of these products, but also as a maker of these products, that it's a little bit like climate change. Sometimes I get so overwhelmed and it makes me unable to act. So part of the goal of the kit was like, okay, how do we find a way into this? How do we try and get past that feeling of stuckness that I keep hearing from people I know who work at larger companies? They're like, I've got my objectives for the quarter: it’s to do this. Anything else I do is a bonus and also a distraction.

And I think there's something really important about how do you at once raise awareness of the harms, but also communicate what's possible.

Particularly if you look at young people leaving university now, we know they care. It's a matter of how much choice they will have in the economic environment we’re in, particularly in the U.S., where college fees are so crazy.

There’s something about how the whole structure is set up that needs to be critiqued, but the challenge is it’s so painful to critique it because it goes back to our sense of self. And I think it's like when you're critiquing tech with tech people. You're basically saying they’ve done something wrong. It's a little bit like the structural racism conversations that are happening: it's painful talking about this because most of us want to do better, but it’s still a painful process you have to go through to work out how to do better.

I was going to ask you about your theory of change, which you’ve sort of addressed indirectly.

Yeah, I guess the only thing I didn't probably highlight enough (and I can share a Medium post that I've written on this) is that we just believe now the change will come from the workers, whether pushing back, whether speaking up, whether doing better. And I think that's a real evolution in our thinking to be honest. And I wish more funders would join us on that journey.

I think the funding world is still reckoning with our own power and privilege. And we need to get better at that. We need to get better at giving our power away and centering workers and groups, not individuals.

What brings you hope that this will change? Where should we be looking? Where are those little seeds of the future hiding amidst us that we just don’t see yet?

I think there's a lot to be optimistic about, but then that's my nature.

If you look at reputational risk in massive companies, there hasn't really been any change in the last 10 years. What has made a difference is a lot of the worker organizing. I think the recession that we're going into is going to make a huge difference in terms of companies that we value. And it's also hopefully gonna nudge governments into doing a lot more because the moment in time is here. One thing that I'm very optimistic about is young people who are graduating now, and this is where I worry about responsibility becoming a privilege and economic realities and all that.

We've been funding this thing called Responsible CS for the last couple of years. And it's basically a program to bake ethics into undergraduate computer science education at 17 different universities. I realized that computer science graduates, they just do hours of code, that's all they do. There are never any components that speak about power. These things I think are very important. The students that are graduating from that course this year, obviously are graduating at a time of incredible change. I feel for them, it's really hard. At the same time, a lot of them feel more motivated and activated by the pandemic, by the fight for racial justice, than they were beforehand.

Whether it's the trend around cooperative governance structures, whether it's the trend around mutual aid networks (which I'm kind of excited by), I think all these are seeds of excitement. But they don't look like the things we thought of as promising five years ago. Often in technology when we think about trends we think of them in crude, old-fashioned ways: so and so raised all this money! These are old signs and we have to evolve past them. Whether it's the fact that there are now accelerators that exist purely for co-op companies to get started, but also help share best practices... I love that.

I think particularly the activism of the youngest generation, like Extinction Rebellion, Sunrise Movement — I'm just incredibly excited by that. And I think part of the job of the older generation is to get out of the way, frankly.

---

🧰 Download the Ethical Explorer Pack.

📊 This civic tech sheet (h/t Steve Messer) has a list of sessions, frameworks and other useful tools for considering privacy and ethics in tech innovation. What would you add to it? Drop your favorite resources in the comments and we’ll highlight them next week!

As always, if you like what you read, follow us on Twitter, Instagram and, newly, LinkedIn.

Thanks for being part of this community,

The CS Team

Images courtesy of Ethical Explorer and the Omidyar Network, which also helps fund Civic Signals.

Civic Signals is a partnership between the Center for Media Engagement at the University of Texas at Austin and the National Conference on Citizenship, and was incubated by New America. Please share this newsletter with your friends!