#15: Paint The Town

What can street murals tell us about content moderation and systemic change?

Welcome to Civic Signals, the newsletter where streets and tweets meet.

This week: From hashtags to murals, when symbols translate into real-life action and when they don’t. Can an evocative plaza be both an empty performative gesture and good public art? Does content moderation actually reckon with tech’s systemic problems? Finally, Twitter tries on subtlety, drawing on a proud tradition of nudging.

Keywords: Network effect

n. A phenomenon in economics and business in which a good or service becomes more valuable as more people use it.

Technologies like the telephone, the fax machine, or social media networks have to grow a user base to achieve a network effect, in which each additional person who uses a product makes it more useful to existing users. Services have to reach a “critical mass” of users to realize their potential value. But because the effect is based on quantity rather than quality, adding users can eventually slow a system down, whether that means getting a busy signal on the phone or feeling content overload on a social media site.
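One common back-of-the-envelope way to see why each new user adds value (our illustration, not part of the definition above; it is the idea usually associated with Metcalfe’s law) is to count the possible pairwise connections among n users. Each user can connect with n − 1 others, and every connection is shared by two people, so:

$$
C(n) = \frac{n(n-1)}{2}, \qquad C(n+1) - C(n) = n
$$

In other words, the 11th user on a network of 10 adds 10 new possible connections (45 becomes 55), and each later arrival adds more than the one before. The same arithmetic offers one way to read the downside: possible connections, and the messages traveling along them, grow much faster than the user count itself.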

What’s clicking

Online

“This you?” is Twitter’s new meme. Here’s what it’s all about. - New York Times

Why Facebook staffers won’t quit over Trump’s posts - The Atlantic

A news organization isn’t a public square any more than Twitter or Facebook is - New York Times

Offline

“Safe streets” are not safe for Black lives - CityLab

Let’s meet on the porch - New York Times

The future of Seattle’s Capitol Hill Autonomous Zone is predictable - The Stranger

Linked

What the protests look like on Twitter versus cable news - Slate

Inside Nextdoor’s “Karen problem” - The Verge

America is changing, and so is the media - Vox

This week’s Double Click:

Racial justice requires algorithmic justice, by Joy Buolamwini, Medium

As IBM and Amazon pull back from facial recognition, more companies should follow. (h/t to Sasha Costanza-Chock)

Copy + Paste

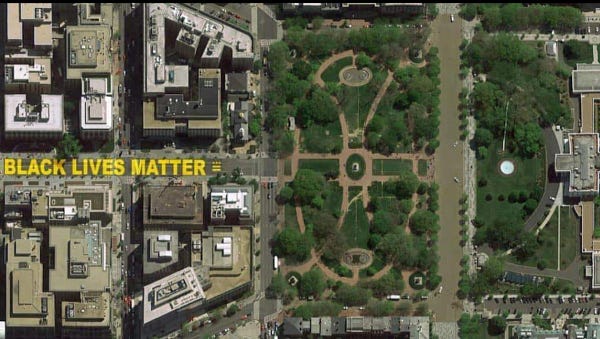

By now you’ve probably heard that Washington, D.C. has a new street mural, right across from the White House, that spells out “Black Lives Matter” across two blocks of pavement. Painted by local artists and the city’s Department of Public Works, the mural drew a line in the turf battle between D.C. Mayor Muriel Bowser and President Trump after federal police scattered protesters in Lafayette Park for a photo-op in front of St. John’s Church. The move has also spawned a new meme: anti-racism messages painted on streets across the country.

Almost immediately, movement organizers criticized the mural as a performative gesture that glossed over Mayor Bowser’s actual positions on addressing systemic racism in policing. Ashley Harris, an organizer and writer, described the D.C. mural as a “vanity project” that doesn’t commit to actual policy reform. “Without action, the mural is window dressing,” writes design critic Kyle Chayka in the New Yorker. But Chayka argues that the minimalist, all-caps yellow paint does achieve one goal of public art: eliciting a response from its audience.

By outlining a stretch of public space and making it more welcoming, the Sixteenth Street mural invites interaction: with its letters, its message, and other people. In that way, it’s the opposite of another recent monochrome phenomenon, the black squares that took over Instagram last Tuesday, as users attempted to demonstrate solidarity with the protests.

Chayka describes how public art can invite new art. First, movement organizers remixed the city’s work themselves, adding “Defund the Police” to the city-sanctioned mural. Now the broad street letters have been reproduced in Raleigh, Oakland, Louisville, Charlotte, and Seattle, multiplying like the protests themselves.

But these displays of solidarity don’t always grasp the movement’s message. New York City Mayor Bill de Blasio quickly co-opted the idea for the city’s boroughs, even as he faces criticism for ignoring the NYPD’s handling of the protests. In Philadelphia, police helped paint an “End Racism Now” mural, which residents saw as a PR stunt that amplified “empty promises” rather than reckoning with police actions against protesters the week before, according to the Philadelphia Inquirer.

The debate over surface-level support versus systemic change on city streets has played out online, too. As platforms begin to address content moderation, combating overt racism on social media still doesn’t reckon with the platforms’ supposedly “neutral” algorithms. Whether it’s a government embracing a street mural slogan or a social media company adopting new content moderation policies, both gestures risk failing to translate a critical mass of demands into structural reform: what makes a message easy to adopt also makes it difficult to keep those in power from co-opting its meaning and use.

Speaking with Sarah Drinkwater of the Omidyar Network earlier this week, Safiya Umoja Noble, the author of Algorithms of Oppression, argued that reforming tech platforms has to go deeper than content moderation to address the structural problems facing social media. Here’s an excerpt of Noble’s comments from the virtual CogX Global Leadership Summit and Festival of AI and Breakthrough Technology:

We’re living in a moment where all over the world where there’s an outpouring and an outcry for Black Lives Matter, for George Floyd, Breonna Taylor, for Ahmaud Arbery, we see that all over the world people are using technology to connect and protest and to bolster the calls for police accountability for those who murder black people and also to defund the police.

Many people around the world are organizing using this technology, but we have to ask ourselves what has become of the way in which hate groups are able to easily organize online, propagate the kind of disinformation that has led to the incredible dehumanization, or at least is deeply implicated in the dehumanization, of the most vulnerable people in our societies around the world.

When I think about “what does designing technology for good” look like, that means we center the people who are the most harmed. I think one of the naivetés we see coming out of Silicon corridors around the world is a sensibility of a very esoteric philosophical framework of good. I’ve watched for a decade, as colleagues who are women, feminists, women of color, journalists, scholars who have been talking about the harms of various types of digital technologies on the internet for at least 30 years and concentrated over the last decade.

Those criticisms and those arguments about the harms, the good and the bad, we see those arguments being taken up now and put into frameworks of ethics that de-fang and de-politicize the very things we were talking about: To what degree are these platforms implicated in the undermining, eroding, and sometimes the collapsing of democracy? To what degree are they implicated in real harms? I’m not talking about adjudicating a meme, I’m talking about becoming the vessels and the microphones and the amplifiers at a global scale for calls to hate and harm.

Later in the discussion, Noble continued to explain why looking at content, even political content, cannot reckon with structural questions posed to these companies:

The unit of analysis of talking about what’s happening through these platforms and technologies treat it as if they’re matters of content. The word “content” flattens what we’re talking about here. … [Content moderators] are in fact making executive decisions of a life-or-death matter. In the case of the murder of Philando Castile, would we know about a product called Facebook Live had Diamond Reynolds [Castile’s girlfriend] not captured his murder and broadcast it? Every day moderators are looking at content of this nature that leaves people’s lives hanging in the balance. … The question sometimes should be whether these products and platforms should even exist.

Online and offline, if we judge only our impressions of particular content, we may miss the proverbial forest for the trees. The bigger question is how these systems fail the most vulnerable people among us before they even reach the design stage.

Beta test: Twitter’s new nudge

This week, Twitter unveiled a new feature that tries to nudge users toward a wildly novel idea: reading the article before you retweet it.

According to BuzzFeed News, Android users who try to retweet a link they haven’t opened in the app will get a prompt asking if they would like to read the article first. The company says its aim is to “promote informed discussion,” instead of letting stories spread without readers knowing the full context. While the feature won’t stop anyone from retweeting an unclicked link, it will nudge users with reminders such as “Headlines don’t tell the full story.”

It may all feel a bit like the “Be Kind, Please Rewind” reminders on video-store VHS tapes. But there’s science behind it: the behavioral economics concept known as “nudge theory,” popularized by Richard Thaler and Cass Sunstein in their book Nudge.

By creating “choice architecture” that lets people opt in or out of decisions, policy can gently steer people toward good habits without bans or coercion. Examples in cities include small fees on plastic grocery bags, speed signs that show drivers how fast they’re going, and organ-donor prompts when people apply for a driver’s license. Good nudges can even be game-like: piano stairs that encourage people to take the stairs, a barista’s tip-jar poll, or a ballot bin for cigarette butts.

Once you know what a nudge is, you start to see them everywhere. Have a favorite online or urban nudge? Send us a note at civic.signals@gmail.com or @ us on Twitter.

Stay grounded,

Andrew Small

Illustrations by Josh Kramer

Civic Signals is a partnership between the Center for Media Engagement at the University of Texas at Austin and the National Conference on Citizenship, and was incubated by New America. Please share this newsletter with your friends!