🤷🏻‍♂️ What Musk needs to know

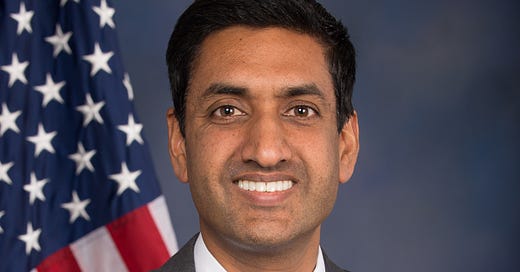

Rep. Ro Khanna on responsibility and Integrity Institute fellows on deplatforming

This week the internet continued to reel in response to the news about Elon Musk attempting to buy Twitter. To take us beyond the viral echo chamber with a nuanced response on Musk and Twitter, we have a special preview of our upcoming magazine issue on Trust: an excerpt of the interview between New_ Public Co-director Eli Pariser and Congressman Ro Khanna, who represents Silicon Valley.

Also, we turn again to our friends at the Integrity Institute, whom we profiled recently in the newsletter. In particular, this week integrity (or trust and safety) workers at Twitter were left wondering how their work making the platform safer fits with their incoming boss’s questionable vision of “free speech.”

Musk’s aggressive tweeting this week has resulted in some employees being targeted with racist abuse and doxxing. Casey Newton writes, “At best, all of this has been a distraction for the work Twitter was doing before they were derailed by the acquisition. At worst, it’s leaving some employees fearful for their lives.”

We also stand with these workers, and in that spirit, this week we’re ceding the spotlight to two Integrity Institute fellows for a mini-debate on social media deplatforming. This week’s Twitter discourse has been dominated by a lot of uninformed yelling about content moderation and related subjects, and it’s such a pleasure to read their informed, deeply considered expert opinions on this complex topic.

In Rep. Ro Khanna’s new book, Dignity in a Digital Age: Making Tech Work for All of Us, he explores his vision for the future of the internet, calling for a “multiplicity, a plurality of discursive spaces” online. This week, we have a preview of Eli and Rep. Khanna’s interview, talking about Musk and Twitter.

Eli Pariser: Okay, so the digital public sphere is a major area of concern for you. So I have to ask about Elon Musk and what it means that the world's wealthiest person is buying Twitter. What does that say about the prospects for digital dignity?

Rep. Ro Khanna: The very fact that we are so concerned over a change in ownership of Twitter, and the impact that that may have on the digital public sphere, highlights the complete lack of regulatory oversight and the lack of any set of ethical norms that have been established for social media.

When Jeff Bezos bought the Washington Post, there were obviously rumblings — is The Washington Post going to be biased? But no one thought on a practical basis that Jeff Bezos was really going to be making day to day decisions. And that's because there's a regulatory framework governing newspapers and there are strict journalistic norms, and it was made very clear that there was going to be separate corporate governance from editorial decision-making at the Post.

So one question is, is Musk really going to run Twitter day to day, or is he going to do the more prudent thing and have Twitter be separate?

But the fact that we're even having to ask that question shows the problem in the social media sphere, right? You would never ask that question if Elon Musk bought The New York Times or The Washington Post. It wouldn't even be a question. You'd have mass resignation if he suddenly said, "I want to make editorial decisions," but you probably wouldn't have mass resignation from Twitter, because there is no sense that you need to have independent decision-making, that there's a responsibility to the public sphere, and that this can't just be profit maximizing. There's no separate sphere that's divorced from profit maximization in the social media space like there is in the newspaper business and in the broadcast business.

Stay tuned for more of the interview – including Khanna’s optimistic thoughts about the need for digital public spaces – later this month!

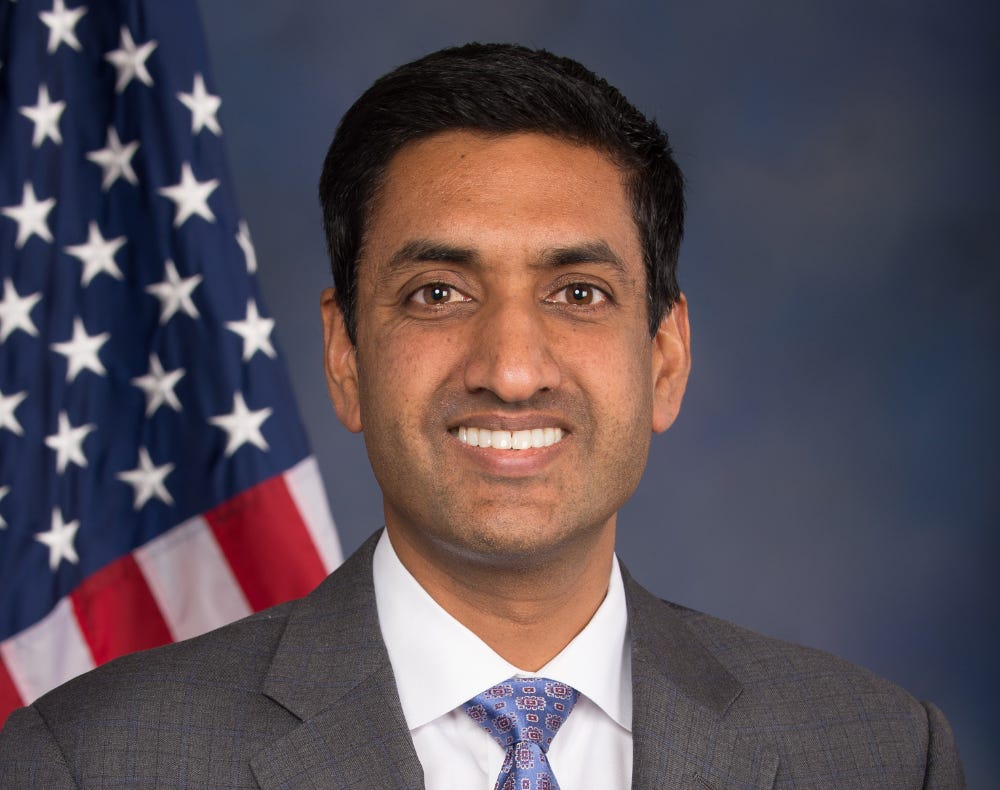

Integrity Institute Fellows take on deplatforming

Key takeaways:

🔨 Deplatforming high-profile users can be less effective due to the Whac-A-Mole problem, where they can just pop up elsewhere

🎙 The reach of users who are causing significant real world harm can be substantially limited through deplatforming

📞 Formal and informal channels for communication between platforms and even federal agencies may play an important role in deplatforming

This week there’s been a lot of conversation about what kind of content moderation would be welcome on Elon Musk’s Twitter. Does a Twitter without any “censorship” mean that high-profile deplatformed users — even Donald Trump — are allowed back? Before Musk’s offer, I sat down to chat with two Fellows at the Integrity Institute, Dylan Moses and Sagnik (Shug) Ghosh, to get to some of the core issues around the practice.

Over the last three years, Dylan Moses has held roles in content policy and operations at Facebook and YouTube focused on mitigating the risks of online hate speech, terrorism, and misinformation. Shug Ghosh has worked as an engineering lead on integrity at three tech companies, including three years on Facebook's Civic Integrity team. At Facebook, he led efforts to enforce against abusive actors disrupting civic discourse.

There were many points of agreement between the two, but Moses and Ghosh diverged most in how effective they think deplatforming is as a strategy for dealing with high-profile users with a lot of visibility and reach. Experts like these two know what they’re talking about from having worked on these issues for years at some of the largest platforms, and if we’re lucky, Musk will stop angrily tweeting and instead listen to what they’ve learned.

Defining deplatforming

Josh Kramer: Do you both see the removal of high-profile individuals and large, mass bans of categories of users as falling under the umbrella of deplatforming?

Shug Ghosh: In my mind deplatforming isn't restricted to users with high reach — most deplatforming happens with users at lower reach. Just the highest profile stuff has high reach.

Dylan Moses: My definition of deplatforming I think only covers people with significant enough reach, public figures. It goes beyond just violating the terms of service more than three times in 90 days. It's more like your speech and presence on the platform is not just a brand liability but a significant risk of real-world harm.

SG: It seems like we can break this up into 3 groups roughly:

1. High-profile figures. Policy teams get very involved here. The contested violations are usually very visible and get a lot of attention.

2. Very dangerous actors. Companies often have dedicated teams focused on these actors to completely remove them from the platform, and work with policy teams to do so (high-profile terrorism, for example).

3. Everyone else. Policy teams usually don't get involved (other than initially to set the guidelines), but most accounts that're taken down on platforms go through these systems.

I think scoping our conversation to public figures would probably be most interesting.

DM: One hundred percent. I think we agree deplatforming is ineffective to a degree, particularly when you have someone who is very adversarial, like Alex Jones. The idea was likely to round up all instances of him appearing on the platform — groups, accounts, users with his likeness — and then remove them. Then, make him persona non grata on the platform with a praise, support and representation ban.

Playing Whac-A-Mole

DM: If the goal is simply to remove the entity, then deplatforming is effective up to the point where the user can just use a VPN and create NewsWars instead of InfoWars like Jones did. If the goal is preventing offline harm from content stemming from your platform, I think that’s more dubious.

I think my point, though, is that deplatforming can't be the be-all and end-all, particularly when public policy teams have political concerns to consider. Second, as with Trump, one always has the opportunity to just go to a different platform or create their own. There's less reach, but I'm not sure the capacity for harm decreases.

JK: OK, Shug, if a deplatformed user can just pop up in a different place like in Whac-A-Mole, why deplatform at all?

SG: Short answer: reach. There was an article about how when Trump was banned from Twitter, misinformation decreased 70%+. People can, and do, go to other platforms, but their reach is substantially decreased. The main problem with social media is not the introduction of harmful content — it's that social media allows unprecedented reach for that content.

Even when InfoWars came back as NewsWars, their reach was dramatically reduced. They had 30K fans instead of 1M+.

Coordinating efforts

JK: Dylan, if deplatforming is not a panacea, a perfect solution, do you think there are other solutions that are more effective than deplatforming, either solo or in combination with each other?

DM: I do, but I fear that some of those same efforts might remove Section 230 protections from platforms. So in general platforms do share information about people they deplatform with other platforms. These channels are informal — sometimes director A from Facebook texting director B from YouTube about someone they're about to remove.

I think that type of coordinated activity helps with removing dangerous people from the mainstream platforms. Additionally, platforms have formal channels to service law enforcement requests for criminal activity happening on the platforms, such as GIFCT. But what I think is necessary is more formal government collaboration, including the Department of Homeland Security and the Department of Justice, with the mainstream platforms and some of the smaller ones like Gab, etc., to coordinate/compel platforms to provide information on the most dangerous people.

The problem with that approach, though, is that platforms collaborating with government 1) just isn't a good look for PR, and 2) would likely make them state actors and limit the amount of moderation they can do.

JK: Section 230 considerations aside, Shug: do you think something along these lines makes sense? Or what about other mechanisms to restrict reach besides deplatforming?

SG: A lot of this does depend on the types of actors. Government cooperation on Trump, for example, was out of the question for obvious reasons. There are also several state actors that violate different international policies, which can make coordination difficult.

In general, I don't think government coordination would be as effective for high-profile users. For terrorist organizations, this is more feasible (and does happen). Platforms often do use other mechanisms to restrict reach short of deplatforming: things like demoting users' reach in News Feed, removing users from recommendation systems, or restricting ads.

I'm supportive of having those tools in the toolbox; when and how to use them is situational. Deplatforming should be used with caution, and in typical cases the other tools should be applied first. Those tools are often ineffective for high-profile public figures, as much of their reach comes from the media and people talking about them.

Standards for public figures on social media

SG: I do not think exceptions should be applied to high-profile public figures; if anything, they should be held to a higher standard. When I say higher standard here, I mean in terms of the language used and classification of their posts; a lot of high-profile actors are good at toeing the line of content moderation policies at scale.

DM: +1000. Public figures are held to a higher standard because for better or worse they choose the spotlight. Choosing that spotlight comes with the responsibility to use that voice responsibly. Freedom of speech doesn't imply reach. (That last line is from Renee DiResta.)

JK: And yet this never really happens in practice, right?

DM: It's public record at this point that at least at one major platform this happens infrequently. (I think here we're talking about Facebook re: WSJ's Facebook Files.)

SG: Right, well, I think what ends up happening is that the standard on the actual posts themselves is lowered, and that's used to justify the more lenient action. Like, "that's not really misinfo" or "that's not really hate speech," even when the public feels differently and the company's own policies cover those as such.

DM: Yes. Completely agree. Or that it doesn't meet the standard, which is typically a public policy decision. You can even look at the Rogan situation as a more recent example. Spotify has clear policies on not allowing dangerous medical advice (my paraphrasing). But their enforcement action for him in many cases was to put a COVID-19 information label on those podcasts, where the health information about vaccines or the virus itself was dubious at best.

JK: Last question: What's the best way forward in your opinion, not for just one platform, but the whole industry? More innovation in options for throttling down amplification? More agreed-upon standards for deplatforming? Something else entirely?

SG: More transparency on what the lines are for deplatforming, not just the overall numbers, but the higher-profile samples.

DM: Honestly, from my side it's more government (inter)action with platform companies to develop clearer standards for when it should happen and what if any criminal/civil liability the user should face for their content.

Thanks Dylan and Shug!

Community Cork Board

In case you missed it we have three new positions up on our website. Check out the job descriptions for COO, Head of Product, and Head of Community, and apply here.

And for the first time, we’re offering internships! We have open internships in communications, design, operations, and research.

We’re getting serious about building a job board here in the newsletter, and if you’re also motivated by building a better internet, we want to know about your open roles so we can share them in this space. Please email us with “JOBS” in the subject line at hello@newpublic.org.

Reminder: we have an Open Thread on Tuesday, May 3, at 12pm ET / 9am PT. Look for the email in your inbox inviting you to the conversation.

Finally, we’re making the rounds talking about social media. Deepti was on the podcast Reimagining the Internet, and Eli was on The Next Big Idea.

Standing with the workers who do the content moderating,

Josh

Photos courtesy of Dylan Moses, Shug Ghosh, Rep. Khanna. Design and illustration by Josh Kramer.

New_ Public is a partnership between the Center for Media Engagement at the University of Texas, Austin, and the National Conference on Citizenship, and was incubated by New America.