🏆 What the Oscar race can teach us about AI.

The power of predictions in our most passionate communities.

Before we begin: our latest research project, Community by Design, is live on our website and open for your contributions! Plant a seed for the future of digital public spaces by submitting a digital space, feature, or practice.

Act One: a wide shot on our Prediction Era.

“The first time I didn't feel it, but this time I feel it. And I can't deny the fact that you like me. Right now, you like me!”

—Sally Field for Places in the Heart, 1985

Oscar stans love to predict the outcomes.

There’s a saying that, contrary to popular belief, LA really does have four seasons: earthquake season, fire season, pilot season, and—my personal favorite—awards season.

My first proper LA awards season came when I was a freshman in college, the spring Moulin Rouge! came out. I think I watched Nicole Kidman come down on that swing at least 30 times that summer.

I wanted the very best for that film, and that meant one thing and one thing only: an Academy Award nomination. So I started tracking its “For Your Consideration” (FYC) campaign: ads in the trades, radio interviews, magazine articles, you name it. It introduced me to the concept of precursors and the full awards circuit, and it was an absolute thrill to root for it in this race.

But as I learned more about all the filmmakers that year and watched and appreciated more of their work, my passion for the Oscar race grew well beyond Moulin Rouge! into a full obsession with the prediction process in every category.

I still consider myself to be an awards stan, though I don’t watch nearly as many new releases as I used to. Why do I, and so many other stans, continue to follow this race year after year? In the words of Michael Schulman in his newly released Oscar Wars:

“Art isn’t meant to be ranked like a sports team or scored like a tennis match, but human nature drives us towards gamesmanship: we like watching people win or lose, and if given the chance, we want to win.”

The Oscars are next Sunday, which means we’re nearly at the finish line for awards season. But the predictions on everyone’s lips have nothing at all to do with this year’s Oscar race.

In fact, the predictions that are currently dominating culture are the ones found in generative AI. This latest wave of new imaging and new writing is forming a new legion of prediction fanatics, and I think Oscar fandom can offer a unique perspective on AI’s ascent.

The joy of playing predictably random games, now with AI.

Our predictive AI journey starts with a roll of the dice. Ancient Greeks and Romans were very familiar with games played with prototypical dice, or astragals, but the concept of probability emerged in the 16th century when Girolamo Cardano, a Renaissance man and an avid gambler, wrote equations for dice game analysis in The Book of Games of Chance. These equations helped lay the foundation for probability theory, on which much of modern computing, including artificial intelligence, depends.

If we’ve been playing probability games since classical antiquity, why did it take so long for probability theory to arrive? According to Steven Johnson in his book Wonderland: How Play Made the Modern World, it was a matter of mechanical standardization:

“Seeing the patterns behind the game of chance required random generators that were predictable in their randomness. The unpredictable nature of the physical object made it harder to perceive the underlying patterns of probability. When dice had become standardized in their design, it made the patterns of the dice games visible, which enabled Cardano, [Blaise] Pascal, and [Pierre de] Fermat to begin to think systematically about probability.”

We are now experiencing a quantum leap in “predictable randomness” with this latest wave of AI tools, trained on an Internet filled with standardized formats of images and text, that generate seemingly unlimited uncanny guesses to answer a rather provocative question: “What would a human do?”

If our fan communities are any indication—entertainment, sports, fashion—what a human would do is play a game with rules for engagement. More from Steven Johnson’s Wonderland:

“Playing rule-governed games is one of those rare properties of human behavior that seems to belong to us alone as a species. So many other forms of human interaction and cognition have analogs in other creatures: song, architecture, war, language, love, family. Rule-governed game play is both ancient and uniquely human. It is also one of the few activities—beyond the essentials like eating, sleeping, and talking—that three-year-olds and ninety-three-year-olds will all happily embrace.”

For these reasons, humans were destined to fall deeply in love with AI’s sophisticated gameplay, or at least certain applications of it. But for many, AI is far from lovely. The thrill of newness is outweighed by its mistakes and its high potential for human harm.

To keep things within the language of games and probability, I think it’s helpful to frame the societal tension around AI as a matter of stakes: how the low stakes of current tools draw us in, and how high stakes for the future of AI freak us out.

Act Two: a split-screen on AI’s high and low stakes.

“God bless that potential that we all have for making anything possible if we think we deserve it. I deserve this. Thank you.”

—Shirley MacLaine for Terms of Endearment, 1984

The AI starter pack is a low-stakes confidence boost.

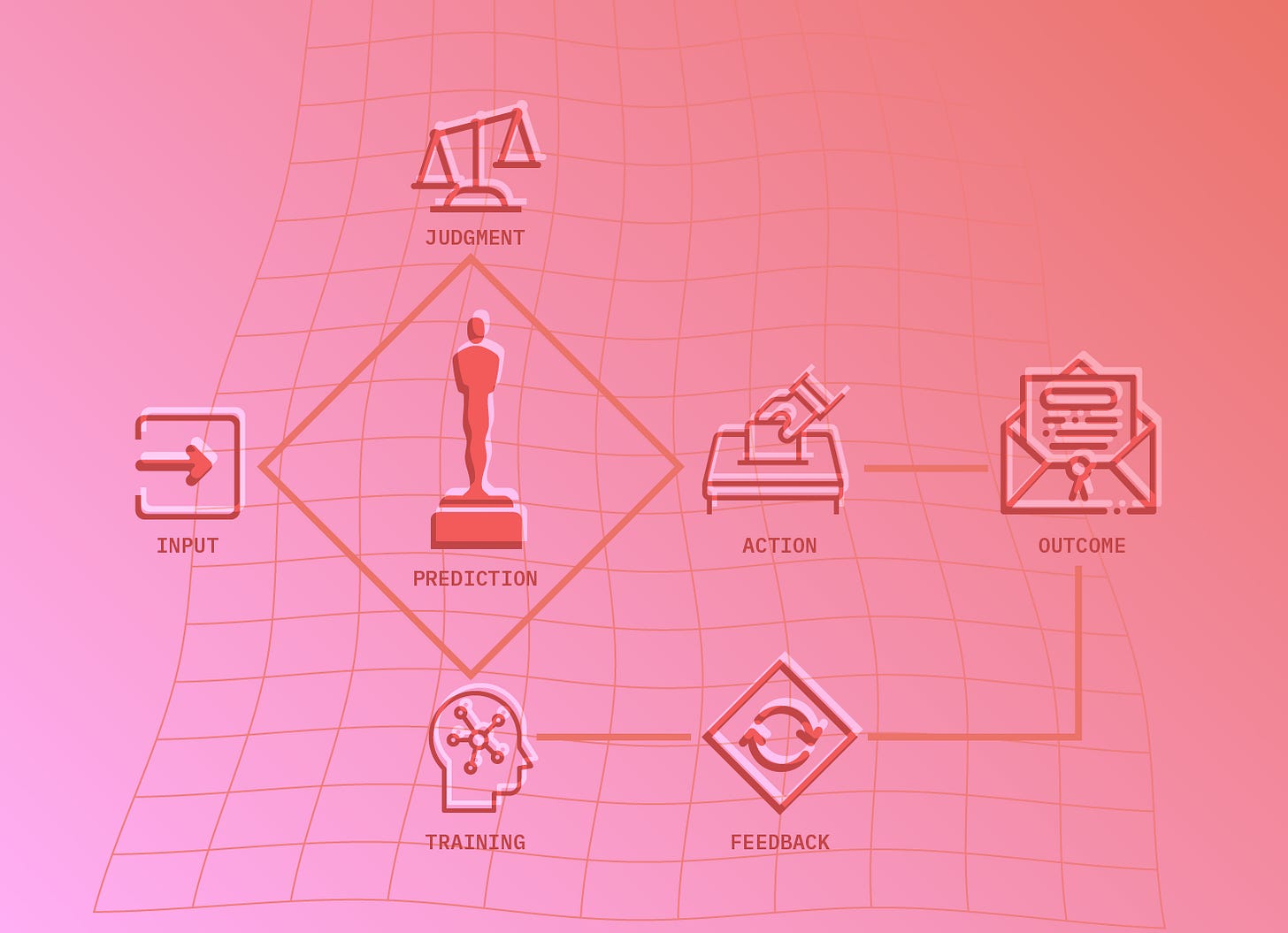

To better understand the role of stakes in AI, I defer to the wisdom of Ajay Agrawal, Joshua Gans, and Avi Goldfarb. They had this to say in their book, Prediction Machines:

“While judgment tells you what the value of different possibilities is overall, stakes focus on one particular aspect of judgment—the relative consequences of errors. Thus, in deploying AI, you want judgment to come from the right person, [and] the choice about whether to fully automate a decision or not on the basis of AI prediction relies on how measurable the stakes can be.”

My Oscar predictions are a low-stakes exercise. When I fill out my ballot based on the training data in my brain and input data from social media and television, my judgments and decisions are pleasurable because there’s no cost for my mistakes. In fact, surprise outcomes often prove delightful. (Olivia Colman, I’m looking at you!)

Our current relationship to most AI tools is similarly low-stakes. The Bold Glamour filter blazes its way through everyone’s FYP on TikTok and ChatGPT finds its way into classrooms across the country because they make prediction cheap (literally free), so we can do a lot more of it. When these tools get a prediction “wrong” by producing a subjectively unappealing or objectively inaccurate result, the costs of the mistake are generally low, at least for now.

What is the appeal, then, of AI tools that can make images and write for people who are already trained creators? A recent study explored the ways our brains process the novelty and/or surprise we’re experiencing now with generative AI. The research points to the possibility of a “novelty bonus” of dopamine that could explain why we seek new and playful experiences.

Another compelling motivation for low-stakes AI interaction among the creative class is the concept of vanity. Creative technologist Ari Melenciano reflected on this desire in our Digital Playground event last week as it related to her work with tools like Lensa, Stable Diffusion, and Midjourney:

“I think this level of vanity is actually what kickstarted the popularity of personalized models through AI image generation. I was able to see myself in ways that I had never seen before. It impacted me a lot in my psyche of just understanding identity is really this performative aspect of life… When you free yourself from that, what does that do?”

Raising the stakes for AI is a delicate challenge.

While I deeply enjoy following the Oscar race every year, I’m no gambler. I wouldn’t put anything high-stakes on the line when predicting the results. New precursors might expand the data set and reduce my uncertainty, but there are still so many unknown variables that would make me highly prone to error. (Green Book, for example. I definitely did not predict that win!)

Back to Agrawal, Gans, and Goldfarb’s Prediction Machines:

“Two AIs… can be equally effective in terms of their error rates, but because of the stakes involved, they will be deployed and relied on in low-stakes environments but require substantial additional human resources in high-stakes ones. When AI predictions are faster and cheaper but not better than human ones, AI adopters need to be careful. When the stakes are high, then further care is needed.”

The assertion that AI can handle higher-stakes predictions and decision-making is what Princeton professor Arvind Narayanan refers to as “AI snake oil.” For Narayanan, using AI technology to predict social outcomes, like job success or which kids are at risk, is harmful, misleading, and no more effective than our statistical standbys: regression and counting by hand.

As companies evaluate the role of AI in highly consequential aspects of our lives, like how cars drive themselves, or how talent is hired and fired, or how court decisions are made, the authors of Prediction Machines point to an approach that includes humans in higher-stakes decisions built on AI predictions, to exercise the level of human care and judgment that our current AI tools are not programmed for.

Act Three: a close-up on digital public spaces.

“There's one place that all the people with the greatest potential are gathered. One place. And that's the graveyard. And I say, exhume those bodies. Exhume those stories.”

—Viola Davis for Fences, 2017

Indie feature films, meet indie AI generators.

Even though it seems like the whole world is collectively embracing AI at the same time, the experience of interacting with these tools is largely a solo affair in separate instances of our respective browsers. The communities that form around AI’s outputs are typically a social complement for sharing individual outputs in real time, like the Discord server for Midjourney.

What does AI mean for our shared digital spaces? Aside from functioning as a fiery spectacle for our communal enjoyment, the answer may lie with the data sets that fuel our predictions.

What makes these tools so compelling is the unfathomable global scope of their data and accompanying processing power, iterating and hallucinating at speeds no human could ever hope to match. But there’s a cost: communities or experiences that are not as frequently represented in the original set often find themselves excluded from predictions.

Instead of modeling the entire world, I think there’s potential for communities to build their own localized models with their own data, either new or existing, rather than drinking from the global firehose. Refik Anadol’s generative art practice is one example of sustainable data capture that communities can follow to perform unique, effective, and ethical predictive work with AI.

The Oscar race provides another potentially helpful insight: the importance of community organizing. In Oscar Wars, Michael Schulman reminds us that the Academy formed nearly a century ago in response to the movie industry’s negative public reputation, the external pressure of government censorship, and the internal pressure of unionization.

The Academy settled at least one early conflict, extracting itself from “responsibility in economic and controversial subjects” like the formation of trade unions, which led to the growth of the guilds we know today, replete with their own statuetted celebrations that now serve as Oscar preliminaries. As F. Murray Abraham memorably exclaimed at this year’s SAG Awards, “Union forever!”

If localities are compelled to build their own community data sets for use in AI predictions and subsequent decision-making, perhaps cooperative governance models, like the ones we see in talent unions or professional associations like the Academy, could prove useful in exercising equitable and sound judgment in the application of AI tools and their predictive outputs. They could even lead toward the real-time collective generation of those predictions, something that would truly break the siloed requests that currently define AI tools.

With all the emphasis on writing and photography in AI, maybe we’re all in the movie business? Localizing the data and unionizing its governance could present an opportunity to tell more unique stories through the commissioning, funding, and production of smaller predictive models. An “indie” AI industry, if you will.

Betting on the unpredictable.

Meanwhile, in this year’s Oscar race, with most of the precursors decided in its favor, it feels like Everything Everywhere All at Once has the Best Picture race all sewn up. For a movie that compels us with the power of unpredictability, it’s an ironically predictable position!

The film’s Stochastic Path Algorithm prompts the Wang family to defy expectations in wacky and bizarre ways, like self-inflicting paper cuts or eating chapstick, in order to “‘verse jump” to their counterparts in alternate realities.

Ultimately, the cast finds fulfillment and catharsis by choosing to stay present in their current existence—a comparatively mundane and painful universe—to spend “a few specks of time” with the ones they love.

As we process our own relationship to AI, let’s take EEAAO’s message to heart. Joyful randomness may be humanity’s best strategy for adapting and responding to the seemingly boundless infinity of artificial intelligence. And perhaps the most random and joyful thing we can do is to stay present with the ones we love, to build a better universe with them and for them.

Maybe it’s a longshot. But I’ll take those odds. 🌳

Afterword

Much love to The Film Experience, the blog that I found all the way back in the early 2000s that loved Moulin Rouge! even more than I did, and introduced me to the wonderful world of Oscar prognostications.

And if the Oscar acceptance speech quotes throughout had you feeling nostalgic for ceremonies past, I recommend a stroll through the official database.

Putting everything on a bagel,

Paul