🐾 A social network taxonomy.

Ethan Zuckerman reveals a new classification system for social networks.

True stan confession time: I am a huge fan of Ethan Zuckerman and his work. His piece on social media logics from two years ago was my first introduction to New_ Public, actually!

Last month, Ethan got together with our co-directors to build a new matrix for classifying today’s social networks by ownership and governance. Very grateful to Ethan for his clarity and leadership. Enjoy, citizens. —PM

Our first-ever Digital Playground with All Tech Is Human is this Thursday, February 23 at 5pm EST / 2pm PST.

We’ll be joined by our friend Ari Melenciano to explore the possibilities of AI.

See you in the sandbox. 🤸

“On those remote pages it is written that animals are divided into (a) those that belong to the Emperor, (b) embalmed ones, (c) those that are trained, (d) suckling pigs, (e) mermaids, (f) fabulous ones, (g) stray dogs, (h) those that are included in this classification, (i) those that tremble as if they were mad, (j) innumerable ones, (k) those drawn with a very fine camel’s hair brush, (l) others, (m) those that have just broken a flower vase, (n) those that resemble flies from a distance.”

—Jorge Luis Borges, “The Analytical Language of John Wilkins”

The Classification of Social Networks

Procedural justice and the axes of governance

All taxonomies are, at least in part, arbitrary and incomplete. But taxonomies can be useful, and they make it easier to talk about complex subjects. At a moment when there’s public debate about leaving Twitter for Mastodon, it’s worth spending time on the ways the structure and governance of social networks can differ.

This post is inspired by a conversation I had with New_ Public’s Eli Pariser and Deepti Doshi and with Tracey Meares and Tom Tyler of Yale’s Justice Collaboratory. We were discussing how to help partners in the nonprofit and social change communities think about the rapidly shifting social network space.

Is it more important to reform existing social networks or to build new ones? The question is much easier to answer once you accept that there are LOTS of different types of social networks, an idea Chand Rajendra-Nicolucci and I took on in our Illustrated Field Guide to Social Media.

We can divide social networks in many different ways: text-, image- or video-based; for-profit versus nonprofit; owned by billionaires or not (“those that belong to the Emperor”).

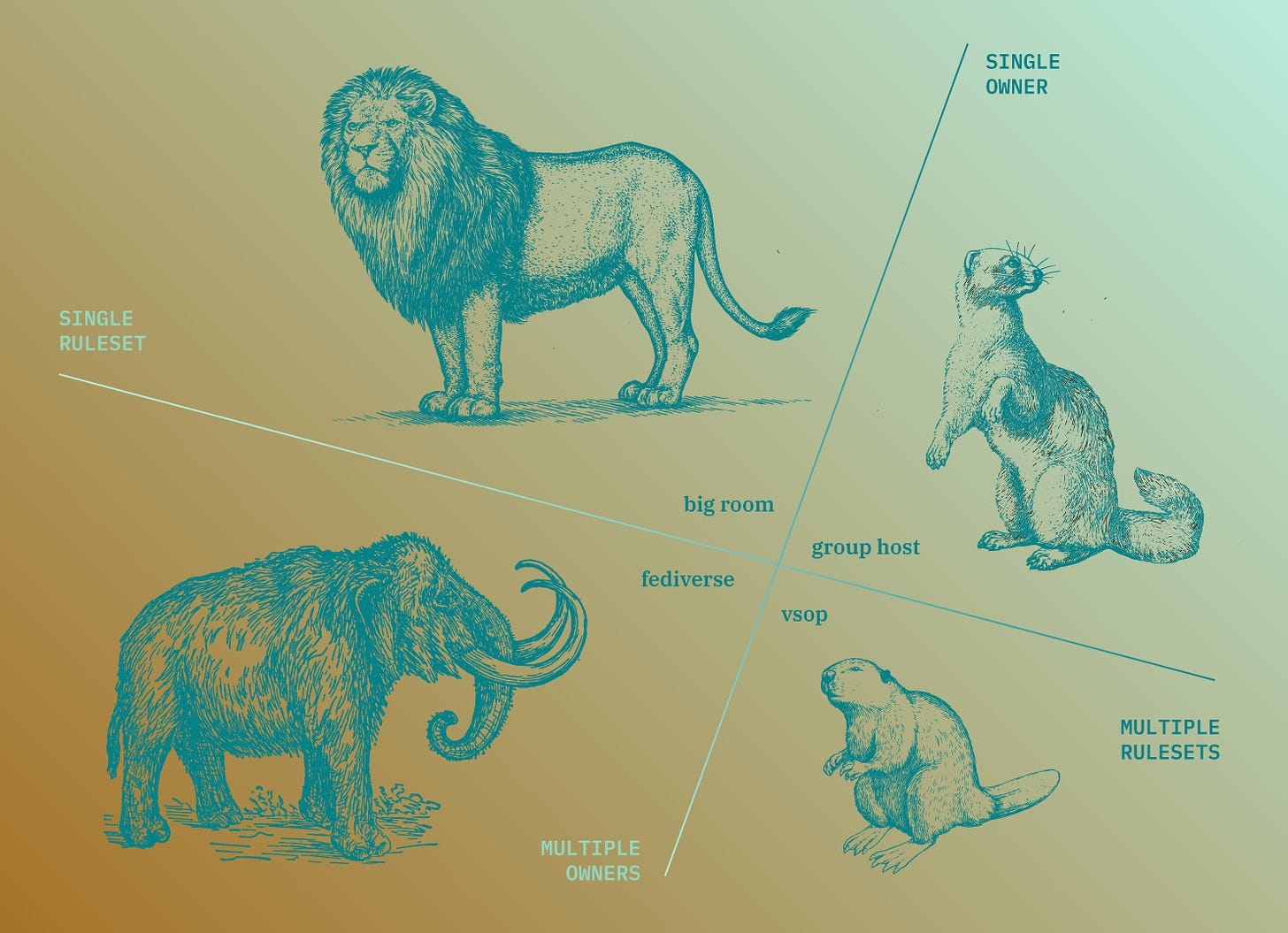

The taxonomy we jointly propose focuses on governance: who has the power to make decisions about what’s allowed on a network, and what the consequences are for breaking those rules.

It’s helpful to know that Meares and Tyler are leading experts in procedural justice, which seeks to make the criminal justice system fairer by treating participants with dignity and respect, giving them a voice, and ensuring decisions are trustworthy, neutral and transparent.

Meares and Tyler believe the same principles that can make the world of criminal justice fairer can work for the governance of social networks. How we might implement procedural justice online depends on how networks are governed, and these models of governance vary widely.

Considering two axes of governance (who owns a platform, and whether a platform behaves as a single space or as one containing multiple rooms, each with its own rules and norms) yields four common models for social network governance.
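As a reading aid, the 2x2 matrix can be written as a lookup table keyed on the two axes. This is only a sketch: the enum and function names are hypothetical, and the cell assignments follow the subtitles used in this post, including the “more or less” caveat that places the Fediverse in the single-rule-set column.

```python
from enum import Enum

class Ownership(Enum):
    CENTRAL = "centrally owned"
    DECENTRALIZED = "decentralized ownership"

class Rules(Enum):
    SINGLE = "one set of rules for the whole space"
    MULTIPLE = "many rooms, each with its own rules"

# Each cell of the matrix names one governance model (hypothetical labels
# taken from this post's section titles). The Fediverse sits in the
# SINGLE column only "more or less", since each server sets its own rules.
TAXONOMY = {
    (Ownership.CENTRAL, Rules.SINGLE): "Big Room",
    (Ownership.CENTRAL, Rules.MULTIPLE): "Group-Hosting / Many Room",
    (Ownership.DECENTRALIZED, Rules.SINGLE): "Fediverse",
    (Ownership.DECENTRALIZED, Rules.MULTIPLE): "Very Small Online Platforms",
}

def classify(ownership: Ownership, rules: Rules) -> str:
    """Return the governance model for a point on the two axes."""
    return TAXONOMY[(ownership, rules)]

print(classify(Ownership.CENTRAL, Rules.MULTIPLE))  # Group-Hosting / Many Room
```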

The Big Room

Centrally owned, single set of rules

Let’s start with what most people think of when they consider social networks: Facebook and Twitter. We’re going to call these “big room” social networks, because a salient characteristic is that they treat online conversation as if it happened in a single, massive online space.

Everyone using Twitter is on the same server, able (with notable exceptions) to see what anyone else says and to address anyone else on the server. I can respond to tweets from Elon Musk or Taylor Swift, and in theory, they might respond to me in turn.

There are some enormous advantages to “big room” social networks. They allow ideas to reach massive audiences, which has been critical for activists in launching social movements like #MeToo and #BlackLivesMatter. The largest “big room” networks can serve as directories for people’s online presence, helping old friends connect and helping people discover others from different parts of the world.

Further reading

Nanjala Nyabola in The Nation on the value of big room networks

Ethan Zuckerman in Prospect on what we’d lose in a Twitter-free world

But big room social networks have some serious drawbacks. Because so many people share the same “space”, these networks are particularly susceptible to spam. (Spammers want everyone’s attention!) They are also vulnerable to harassment, because you share a “room” with people who may not share your norms and values.

And perhaps most importantly, these spaces have a single code of conduct administered by a central entity. Arguments over whether Donald Trump should be allowed on Twitter or Facebook can take on enormous political importance and weight because being removed from the “big room” genuinely limits the ability of public figures to reach diverse audiences.

Group-Hosting / “Many Room” Social Networks

Centrally owned, multiple rule sets

Meares and Tyler have been particularly interested in applying their approaches to another type of social network, one in which a single entity hosts many thousands of separate groups. This category includes Reddit, Facebook’s Groups product, and platforms like Nextdoor.

On the ownership side, a single company hosts many groups, each with its own space. The key characteristic of these networks is a divided model of governance: moderators have some control over the rules within their groups but can be overruled by decisions that take effect across the platform.

Consider Reddit, where moderators often enforce local rules much stricter than those that apply across the platform: on r/science, comments that aren’t supported by peer-reviewed scientific research will be summarily deleted.

However, when subreddits get unruly, the platform might choose to quarantine them (anyone visiting that subreddit has to click through a warning page, and the subreddit’s contents aren’t shared with a sitewide “front page”) or ban them entirely.

Think of the platform’s rules as a “floor”—that’s the minimum set of rules any community can have on a “many room” network, but a specific room might have a much more involved set of regulations governing local behavior.
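The “floor” model amounts to a conjunction: a post stands only if it clears both the platform-wide rules and the room’s own rules. A minimal sketch, assuming rules can be modeled as simple yes/no checks on a post’s text; the `Platform` and `Room` classes and both rule functions are hypothetical, and `cites_a_source` is a deliberately crude stand-in for a rule like r/science’s.

```python
from dataclasses import dataclass, field
from typing import Callable

# A rule is a predicate: it returns True if a post is allowed.
Rule = Callable[[str], bool]

@dataclass
class Room:
    name: str
    local_rules: list[Rule] = field(default_factory=list)

@dataclass
class Platform:
    floor_rules: list[Rule]  # apply to every room on the platform

    def allows(self, room: Room, post: str) -> bool:
        # A post must clear the platform-wide floor AND the room's own rules.
        return all(rule(post) for rule in self.floor_rules + room.local_rules)

def no_spam(post: str) -> bool:
    return "buy now" not in post.lower()

def cites_a_source(post: str) -> bool:
    # Hypothetical stand-in for "supported by peer-reviewed research".
    return "doi:" in post.lower()

platform = Platform(floor_rules=[no_spam])
science = Room("science", local_rules=[cites_a_source])
casual = Room("casual")

print(platform.allows(casual, "Nice weather today"))    # True
print(platform.allows(science, "Nice weather today"))   # False
print(platform.allows(science, "See doi:10.1000/xyz"))  # True
```

A room can only add restrictions on top of the floor; it has no way to relax a platform-wide rule, which is the asymmetry the section describes.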

Group-hosting networks enable much more control over a conversation than big room networks. If you want to restrict conversation in a diabetes support group only to people who have type 1 diabetes—or a political group to people who identify as conservative—you can do so.

Being able to establish a set of rules and norms for communities, and to exclude people who consistently don’t follow those rules, may make discussions more productive and civil. These networks may be particularly appropriate for conversations where participants want privacy to discuss sensitive or personal issues.

This emphasis on privacy leads to one problem with group-hosting networks: limited discoverability. While everyone can find tweets on Twitter via search, you are not able to search within Facebook Groups unless you are a member of that group.

Group-hosting networks handle this in different ways: most subreddits are indexed, so you can find their content even if you’ve never encountered the community before. This creates a different problem: if you discover a post through a search engine, you may not know or understand the local norms and rules for participating in the conversation.

A more fundamental problem with group-hosting networks is the conflict between local norms and platform-wide standards. A moderator may put hundreds of hours into managing her community and still find herself deplatformed if the behavior of the local community deviates substantially from the platform’s standards.

At best, these networks provide communities with spaces they wouldn’t otherwise have online. At worst, they benefit (through ad sales and through collection of behavioral information) from communities who can be thrown off the platform without notice or recourse.

The Fediverse

Decentralized ownership, central rules (more or less)

While most internet users first heard of the Fediverse in 2022, when Mastodon emerged as an alternative to an Elon Musk-run Twitter, experiments with decentralized but compatible social networks have been underway since 2008.

Mastodon is a Fediverse service that supports “microblogging”, much like Twitter. Less widely used Fediverse services support other behaviors: Pixelfed enables image sharing, PeerTube replicates much of YouTube’s functionality, and Funkwhale offers audio sharing and streaming.

The key difference between Fediverse services and the “big room” services they offer alternatives to lies in the ownership and governance of servers. Elon Musk owns Twitter, and can make decisions about who can maintain an account on the service and what content will be permitted. As we recently discovered, apparently he can also change the algorithms to ensure we all see every one of his brilliant and well-thought-through tweets.

But you—if you are somewhat technically sophisticated and can afford a modest server hosting fee—can run your own Mastodon node and set and enforce your own rules. Your conversations don’t have to be limited to the friends you invite to your server—by default, the conversations on your server are “federated” with all other Mastodon servers.

Federation is a powerful strategy for creating the benefits of “big room” social networks while avoiding one of their critical downsides: the power of a single administrator. Mastodon servers can and do have different rules. Some allow commercial advertising; some ban adult content or require that NSFW imagery be blurred until you click on it. It’s wise to choose a Mastodon instance whose rules and norms align with your preferred way of using social media.

Not only can Fediverse nodes set their own rules, they can also choose which servers to federate with and which to refuse to exchange messages with. In the past, this has allowed communities with controversial views to coexist on Mastodon alongside communities that would want to ban their content.

Early in the evolution of Mastodon, fans of lolicon (anime-style imagery depicting children and teenagers) found themselves deplatformed from Twitter and turned to Mastodon as an alternative. Many Mastodon servers chose not to federate with these lolicon-friendly instances. The result: if you accessed Mastodon from a lolicon-friendly server, you’d see this content, and otherwise, you would not.
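The outcome described here, where what you see depends on which server you read from, can be sketched as a visibility check over per-server block lists. This is a simplified model, not Mastodon’s actual domain-block logic: the server names are hypothetical `.example` domains, and the code assumes that a block by either server cuts the link in both directions.

```python
# Hypothetical servers; each maps to the set of domains it has defederated from.
BLOCKS: dict[str, set[str]] = {
    "permissive.example": set(),
    "strict-a.example": {"permissive.example"},
    "strict-b.example": {"permissive.example"},
}

def federated(a: str, b: str) -> bool:
    # Simplifying assumption: a block by either server severs the link both ways.
    return b not in BLOCKS.get(a, set()) and a not in BLOCKS.get(b, set())

def visible(post_origin: str, reader_server: str) -> bool:
    # Posts are always visible on the server where they were written.
    return post_origin == reader_server or federated(post_origin, reader_server)

print(visible("permissive.example", "permissive.example"))  # True
print(visible("permissive.example", "strict-a.example"))    # False
print(visible("strict-a.example", "strict-b.example"))      # True
```

Each administrator edits only their own server’s entry in the block list, yet the combined result determines what every reader on the network can see.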

Further reading

Ethan Zuckerman’s personal blog on the rise of Mastodon in Japan

The ability to defederate is a feature, not a bug—it allows the Fediverse to serve as a “big room” much of the time, but split into different big rooms over key content issues. But defederation puts a great deal of power in the hands of a server administrator.

In a dispute about whether certain rhetoric is transphobic, some Mastodon instances defederated from a new host, journa.host, because users of that site had commented on “anti-trans” content. In at least one case, the content in question was a New York Times article, which is exactly the sort of thing journalists comment on. Whether or not this was the correct moderation decision, it illustrates the kinds of governance problems possible in the Fediverse.

Unless you run your own Fediverse node, you are subject to the decisions your node administrator makes. Some nodes have a strong sense of community, and decisions about rules and federation are made within discussion groups. Others work much as Twitter does, but run by someone who’s not Elon Musk. And the reputation of your Fediverse node matters if you want your messages to reach the rest of the network: journa.host users may migrate to another server if they find people are unwilling to accept messages from their node.

In other words, federation is powerful, but it can be very confusing. Understanding which rules are in play requires both full transparency from everyone setting rules and a commitment from the people who use the system to understand it. The Fediverse replicates much of what’s good about “big room” networks, but it also retains serious problems around moderation, some of which may become more complex simply because there are more moving parts.

Very Small Online Platforms

Decentralized ownership, decentralized rules

Finally, it’s worth considering small, special purpose platforms that aren’t federated. We’ve been using the term VSOPs: Very Small Online Platforms. (It’s a joke—the EU refers to platforms like Twitter and Facebook as VLOPs, or Very Large Online Platforms.)

VSOPs are the opposite of “big room” social networks. They are social networks created for a very specific purpose, with rules, norms and affordances appropriate to that community.

My lab at UMass Amherst experiments with these sorts of small communities. Our software, Smalltown, is a hacked version of Mastodon designed for small-scale conversation about local civic issues. It doesn’t make much sense to connect our discussion of politics in Amherst, Massachusetts to discussions in other small cities, so the network doesn’t federate. Our project learned a great deal from Darius Kazemi’s Hometown project, which is designed for a community of 50-100 active users.

One of the best examples of a successful VSOP is a pretty large online community: Archive of Our Own (AO3). Created by participants in the fan fiction community (where authors write new stories using characters and worlds from established books, films and television shows), AO3 is used by millions of users a week. The site, which runs on its own software, has affordances specific to the fanfic community, including a careful system for labels and warnings about sensitive content.

Further reading

Casey Fiesler’s personal blog on feminist HCI and values design within AO3

Ethan Zuckerman in the Journal of E-Learning and Knowledge Society on how social media could teach us to be better citizens

VSOPs can build tools and practices appropriate to their communities and purposes. Our Amherst Smalltown site provides a great deal of information about local issues so that people can participate in conversations from a well-informed basis; we refer to this as “scaffolding” the conversation. Other networks could include affordances for self-governance, like robust systems for voting on policy decisions. We believe that experimenting with VSOPs as a way of exploring diverse systems of community governance could be good not only for the future of social media but also as a form of education in democratic citizenship.

Before everyone abandons big room social networks and builds their own VSOP, it is worth noting the serious limitations of this method of social networking. It requires significant effort to scaffold healthy conversations, no matter the purpose and community being served. These networks can easily become ghost towns if they don’t meet a real need. Even if these networks do thrive, there are serious problems with discoverability—because these networks don’t federate, their contents are by default only open to members of the community. Making these networks searchable is a significant technical and conceptual problem.

Not One, But Many

Advocating for a pluriverse

The goal of this taxonomy is not to argue for the superiority of one of these models over any other. Each type of social network has benefits and shortcomings, and it’s possible to imagine use cases for each type of platform.

Rather than arguing for one model—federation, for example—to displace existing big room networks, we advocate for a pluriverse, a complex world of interoperable social networks where one can choose a technical architecture and governance structure appropriate to a community’s needs.

Understanding how the different networks in a pluriverse are governed, and what those governance structures imply for different communities, is a critical first step in designing better social media futures. 🌳

Thanks for reading. See you back here in two weeks! Have a great holiday weekend.

Savoring one last heart-shaped chocolate,

Paul

Great taxonomy, clarifies many issues and possibilities that are widely conflated and misunderstood!

For a complementary perspective that is also very relevant, consider the model of online conversation in From Freedom of Speech and Reach to Freedom of Expression and Impression (https://techpolicy.press/from-freedom-of-speech-and-reach-to-freedom-of-expression-and-impression/).

The forgotten (and now threatened) right of Freedom of Impression affects all four of the models in Ethan’s taxonomy with regard to their different paradigms for reach and control -- and how the three different levers of mediation and who controls them apply (censorship, friction, and selectivity, as shown in my second diagram).

In the spirit of that Thought as a Social Process cycle, I will be thinking further about how Ethan’s taxonomy can add richness to my framing of Thought => Expression => Social Mediation => Impression => further thought…

Ethan’s taxonomy -- and the “pluriverse,” “a complex world of interoperable social networks where one can choose a technical architecture and governance structure appropriate to a community’s needs” -- also ties in with the ideas of interlinked webs of digital “hypersquares” (or “hyperspheres”/“hyperspaces”) suggested in Community and Content Moderation in the Digital Public Hypersquare (https://techpolicy.press/community-and-content-moderation-in-the-digital-public-hypersquare/, with Chris Riley).

I feel that this taxonomy, and the major tech platforms themselves, would benefit from the classical legal distinction between 'malum in se' and 'malum prohibitum', or 'bad in itself' and 'bad because prohibited'.

Certain things can be understood as bad in themselves because, wherever these rules are discarded or not enforced sufficiently, the people subject to them, assuming they wish to maintain the integrity of the group or community, will come back to those precise rules regardless of local context. The prohibition of murder, for example, is fairly universal across societies of any scale. Racism is also probably malum in se, since it is explicitly an act against integration and shared or aligned purposes.

But other rules are context-sensitive, and are not automatically the rules any community would arrive at. For example, a community might forbid the word 'idiot' on the grounds that it is racist (associating people whose native language is not English with stupidity), and racism would seem to be a fairly basic thing to forbid. But word meanings evolve, and the people who use it may have no knowledge of its roots. It is not clear that every community whose aim is to come into confluence would forbid it.

A "big room" social network can separate communities into 'spaces' based on topics, interests, or value systems, and so set the 'ground rules' (malum in se) while those spaces layer their own contextual rules on top.

By nesting these spaces so that all content published in a niche also appears in the shared spaces that contain it, and by giving the most amplification to users who earn reputation through peer endorsement from multiple orthogonal communities (bridge builders), it is plausible to build an architecture with the benefits of both federated and 'big room' social networks, without the major drawbacks of either.
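One way to picture this nesting-and-bridging idea is the hypothetical sketch below, not an implementation anyone has described. The space names, the parent map, and the scoring rule are all assumptions; in particular, counting the distinct communities that endorse an author is a crude stand-in for "endorsement from multiple orthogonal communities".

```python
from collections import defaultdict

# Hypothetical hierarchy: each space names the broader space that contains it.
PARENT = {
    "diabetes-type1": "health",
    "health": "public",
    "amherst-politics": "local-politics",
    "local-politics": "public",
}

def containing_spaces(space: str) -> list[str]:
    """The space itself plus every space that contains it, innermost first."""
    chain = [space]
    while chain[-1] in PARENT:
        chain.append(PARENT[chain[-1]])
    return chain

feeds: dict[str, list[tuple[str, str]]] = defaultdict(list)
endorsements: dict[str, set[str]] = defaultdict(set)

def publish(author: str, text: str, space: str) -> None:
    # Content posted in a niche is also published into every enclosing space.
    for s in containing_spaces(space):
        feeds[s].append((author, text))

def endorse(author: str, endorser_community: str) -> None:
    endorsements[author].add(endorser_community)

def bridging_score(author: str) -> int:
    # Assumption: reputation = number of distinct communities endorsing the author.
    return len(endorsements[author])

def ranked_feed(space: str) -> list[tuple[str, str]]:
    # Authors endorsed across more communities are amplified first.
    return sorted(feeds[space], key=lambda post: bridging_score(post[0]), reverse=True)

publish("ana", "Insulin pricing update", "diabetes-type1")
publish("ben", "Town meeting Tuesday", "amherst-politics")
endorse("ana", "health")
endorse("ben", "health")
endorse("ben", "local-politics")

print(ranked_feed("public"))  # [('ben', 'Town meeting Tuesday'), ('ana', 'Insulin pricing update')]
print(ranked_feed("health"))  # [('ana', 'Insulin pricing update')]
```

Publishing the ranking rule openly, as the next point argues, would let every community see exactly why one post outranks another.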

It is also important that the 'ground rules', central to which is the core algorithm, are open-sourced and fully transparent about how content is amplified or suppressed wherever it is published.